MULTIVARIATE TIME SERIES ANALYSIS: LECTURE NOTES

Version without figures.

9 August 2018

Joseph George Caldwell, PhD (Statistics)

1432 N Camino Mateo, Tucson, AZ 85745-3311 USA

Tel. (001)(520)222-3446, E-mail jcaldwell9@yahoo.com

Website http://www.foundationwebsite.org

Copyright © 2018 Joseph George Caldwell. All rights reserved.

Contents

2. SUMMARY OF SINGLE-VARIABLE UNIVARIATE TIME SERIES ANALYSIS. 4

MAJOR FEATURES OF TIME SERIES. 5

THEORETICAL MODELS: WHITE NOISE, AUTOREGRESSIVE, MOVING AVERAGE, ARMA, ARIMA, SEASONAL 11

HOMOGENEOUS NONSTATIONARY PROCESS. 16

IMPULSE RESPONSE FUNCTION (IRF) 19

STATIONARITY TRANSFORMATIONS, TESTS OF HYPOTHESES. 20

MEASURES OF MODEL EFFICIENCY (INFORMATION CRITERIA): AIC, BIC, HQC. 28

GENERAL FORM OF AN ARIMA MODEL. 30

ALTERNATIVE FORMS OF AN ARIMA MODEL. 31

IMPULSE RESPONSE FUNCTION.. 40

ALTERNATIVE REPRESENTATIONS: UNIQUENESS OF MODEL, JOINT PDF, ACF (NORMAL). 41

ALTERNATIVE REPRESENTATIONS: STATE SPACE, KALMAN FILTER. 42

DETAILED EXAMPLE OF THE DEVELOPMENT OF A SINGLE-VARIABLE UNIVARIATE TIME SERIES MODEL. 43

3. SUMMARY OF MULTIVARIABLE UNIVARIATE MODELS (TRANSFER FUNCTION MODELS, DISTRIBUTED LAG MODELS). 54

A DISCRETE LINEAR TRANSFER FUNCTION MODEL. 59

FEATURES OF TRANSFER FUNCTION MODELS. 61

IDENTIFICATION OF TRANSFER-FUNCTION MODELS, USING THE CROSS-CORRELATION FUNCTION; PREWHITENING 64

ESTIMATION OF PARAMETERS OF TRANSFER-FUNCTION MODELS. 67

MEASURES OF MODEL EFFICIENCY. 70

PROCESS CONTROL; POLICY ANALYSIS. 71

IMPULSE RESPONSE FUNCTION.. 72

PREDICTION (FORECAST ERROR) VARIANCE DECOMPOSITION.. 72

DETAILED EXAMPLE OF DEVELOPMENT AND APPLICATION OF A BIVARIATE TRANSFER-FUNCTION MODEL 72

4. GENERAL MULTIVARIATE TIME SERIES ANALYSIS. 72

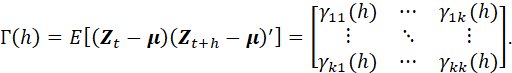

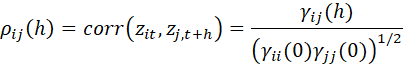

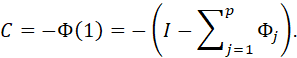

MULTIVARIATE TIME SERIES DESCRIPTORS. 74

NONSTATIONARITY AND COINTEGRATION.. 88

STATIONARITY TRANSFORMATIONS AND TESTS (FOR HOMOGENEITY, COINTEGRATION) 92

MEASURES OF MODEL EFFICIENCY. 97

FORECASTING (UNCONDITIONAL VS. CONDITIONAL) 97

FORECAST ERROR VARIANCE DECOMPOSITION.. 97

ALTERNATIVE REPRESENTATIONS: STATE SPACE, KALMAN FILTER. 99

ALTERNATIVES TO THE GAUSSIAN DISTRIBUTION; COPULAS. 99

DETAILED EXAMPLE OF A MULTIVARIATE TIME SERIES ANALYSIS APPLICATION.. 101

5. TIME SERIES ANALYSIS SOFTWARE. 102

1. OVERVIEW

THIS PRESENTATION IS A SURVEY OF THE BASIC CONCEPTS OF DISCRETE MULTIVARIATE TIME SERIES ANALYSIS. IT BUILDS ON MATERIAL PRESENTED IN OTHER PRESENTATIONS ON DISCRETE UNIVARIATE TIME SERIES ANALYSIS AND CONTINUOUS MULTIVARIATE STATISTICAL ANALYSIS.

THE PRESENTATION PRESENTS KEY RESULTS, BUT NOT MATHEMATICAL PROOFS. MATHEMATICAL DETAILS ARE PRESENTED IN THE FOLLOWING REFERENCES.

BOX, G. E. P., AND GWILYM JENKINS, TIME SERIES ANALYSIS, FORECASTING CONTROL, FIRST EDITION HOLDEN-DAY, 1970, LATEST ADDITION IS 5TH EDITION BY GEORGE E. P. BOX, GWILYM M. JENKINS, GREGORY C. REINSEL AND GRETA M. LJUNG, WILEY, 2016. (IN THIS PRESENTATION, THIS REFERENCE WILL BE REFERRED TO AS BJRL.)

TSAY, RUEY S., MULTIVARIATE TIME SERIES ANALYSIS WITH R AND FINANCIAL APPLICATIONS, WILEY, 2014. (THIS REFERENCE WILL BE REFFERRED TO AS TSAY MSTA.)

LÜTKEPOHL, HELMUT, NEW INTRODUCTION TO MULTIPLE TIME SERIES ANALYSIS, SPRINGER, 2006

HAMILTON, JAMES D., TIME SERIES ANALYSIS, PRINCETON UNIVERSITY PRESS, 1994

THE PRESENTATION INCLUDES FOUR ADDITIONAL MAJOR SECTIONS:

· SUMMARY OF SINGLE-VARIABLE UNIVARIATE TIME SERIES ANALYSIS

· SUMMARY OF MULTIVARIABLE UNIVARIATE TIME SERIES MODELS

· GENERAL MULTIVARIATE TIME SERIES ANALYSIS

· TIME SERIES ANALYSIS SOFTWARE

A DETAILED DESCRIPTION OF UNIVARIATE TIME SERIES MODELS IS PRESENTED IN THE TIMES TECHNICAL MANUAL, POSTED AT INTERNET WEBSITE http://www.foundationwebsite.org/TIMESVol1TechnicalBackground.pdf.

2. SUMMARY OF SINGLE-VARIABLE UNIVARIATE TIME SERIES ANALYSIS

THIS PRESENTATION BEGINS WITH A REVIEW OF CONCEPTS FROM SINGLE-VARIABLE UNIVARIATE TIME SERIES ANALYSIS (OR SCALAR TIME SERIES ANALYSIS). IN THIS SECTION, ATTENTION FOCUSES ON A SINGLE SCALAR RANDOM VARIABLE.

REFERENCES TREATING SINGLE-VARIABLE UNIVARIATE TIME SERIES INCLUDE:

BOX, GEORGE E. P., GWILYM M. JENKINS, GREGORY C. REINSEL AND GRETA M. LYUNG, TIME SERIES ANALYSIS, FORECASTING AND CONTROL, 5TH ED., WILEY, 2016

CRYER, JONATHAN D. AND KUNG-SIK CHAN, TIME SERIES ANALYSIS WITH APPLICATIONS IN R, 2ND ED., SPRINGER, 2008

MAJOR FEATURES OF TIME SERIES

A TIME SERIES IS A SET OF RANDOM VARIABLES HAVING A TIME INDEX: {X(t), tєT}, OR X1, X2,…,Xt.,,,. A TIME SERIES IS CALLED DISCRETE OR CONTINUOUS ACCORDING AS THE INDEX SET T IS DISCRETE OR CONTINUOUS. FOR THIS PRESENTATION, AND FOR MOST APPLICATIONS, THE TIME INDEX IS THE SET OF INTEGERS, 1, 2, …, AND THE TIMES TO WHICH THEY CORRESPOND ARE EQUALLY SPACED AND SUCCESSIVE IN TIME (E.G., HOURLY, DAILY, OR MONTHLY OBSERVATIONS OF A PHENOMENON OR PROCESS, SUCH AS TEMPERATURE OR STOCK PRICE).

IN GENERAL, THE RANDOM VARIABLE Xt MAY BE A VECTOR. IN THIS SECTION, IT IS A SINGLE-COMPONENT VECTOR, I.E., A SCALAR.

Figure: Example of a time series.

TIME SERIES DESCRIPTORS: MEAN, VARIANCE, COVARIANCE, ACF, PACF, SDF, STATIONARITY, HOMOGENEOUS NONSTATIONARITY, HETEROSCEDASTICITY

A STRICTLY STATIONARY

(OR STRONGLY STATIONARY) TIME SERIES (OR STOCHASTIC PROCESS) IS ONE FOR

WHICH THE JOINT DISTRIBUTION OF THE RANDOM VARIABLES ![]() IS THE SAME AS

THE DISTRIBUTION OF THE RANDOM VARIABLES

IS THE SAME AS

THE DISTRIBUTION OF THE RANDOM VARIABLES ![]() FOR ANY VALUE

OF k. LET US DENOTE THIS COMMON DISTRIBUTION AS F(X). FOR THIS PRESENTATION,

WE SHALL ASSUME THAT THE RANDOM VARIABLE IS CONTINUOUS, WITH DENSITY FUNCTION

f(X).

FOR ANY VALUE

OF k. LET US DENOTE THIS COMMON DISTRIBUTION AS F(X). FOR THIS PRESENTATION,

WE SHALL ASSUME THAT THE RANDOM VARIABLE IS CONTINUOUS, WITH DENSITY FUNCTION

f(X).

CONSIDERING THE CASE m = 1, THE DEFINITION OF STRICT STATIONARITY IMPLIES THAT THE MEAN OF THE TIME SERIES IS THE SAME FOR ALL VALUES OF t, AND THE VARIANCE IS ALSO THE SAME FOR ALL VALUES OF t.

CONSIDERING THE CASE m = 2, THE DEFINITION OF STRICT STATIONARITY IMPLIES THAT THE COVARIANCE OF ANY TWO RANDOM VARIABLES OF THE TIME INDEX SET, Xt AND Xt+k, IS THE SAME FOR ALL VALUES OF t.

FOR A STRICTLY STATIONARY SERIES, THE MEAN MAY BE ESTIMATED IN TWO WAYS: BY OBSERVING A SIMPLE RANDOM SAMPLE OF Xt AT A FIXED TIME t AND AVERAGING; OR BY OBSERVING A SAMPLE OF Xt FOR DIFFERENT TIMES t AND AVERAGING. IN REALITY, IT MAY BE IMPOSSIBLE OR IMPRACTICAL TO TAKE A SAMPLE AT A FIXED TIME. THESE TWO MEANS ARE CALLED THE TIME AVERAGE AND THE ENSEMBLE AVERAGE. FOR A STRICTLY STATIONARY TIME SERIES, THESE TWO AVERAGES ARE THE SAME. THIS PROPERTY IS CALLED ERGODICITY, AND THE TIME SERIES IS SAID TO BE ERGODIC. IN WHAT FOLLOWS, WE WILL ESTIMATE THE MEAN OF THE TIME SERIES (AND OTHER PROPERTIES, SUCH AS THE VARIANCE AND COVARIANCE) USING THE TIME AVERAGE.

AN OBSERVED TIME SERIES IS SAID TO BE A SINGLE REALIZATION OF AN UNDERLYING STOCHASTIC PROCESS (ABSTRACT OR REAL) THAT IS SAID TO GENERATE THE REALIZIATION. CONCEPTUALLY, THE UNDERLYING STOCHASTIC PROCESS COULD CONCEIVABLY GENERATE MANY ALTERNATIVE TIME SERIES, BUT IN MOST PRACTICAL APPLICATIONS ONLY ONE SERIES IS OBSERVED (SINCE TIME FLOWS AND WE MAY HAVE ONLY ONE CHANCE TO MAKE AN OBSERVATION AT A SPECIFIED TIME). THE UNDERLYING STOCHASTIC PROCESS IS ALSO CALLED THE DATA GENERATING PROCESS (DGP).

THE TERMS TIME SERIES AND STOCHASTIC PROCESS ARE USED SOMEWHAT INTERCHANGEABLY. THE TERM TIME SERIES IS USED MORE IN REFERRING TO A PARTICULAR OBSERVED REALIZATION OF A STOCHASTIC PROCESS, AND THE TERM STOCHASTIC PROCESS FOR THE THEORETICAL (UNREALIZED, MATHEMATICAL, CONCEPTUAL) MODEL THAT GENERATES THE REALIZATION.

NOTE THAT A SAMPLE OF OBSERVATIONS OVER TIME IS NOT, IN GENERAL, A SIMPLE RANDOM SAMPLE. THE MEMBERS OF A SAMPLE OF OBSERVATIONS FROM A REALIZATION OF A STOCHASTIC PROCESS ARE TYPICALLY CORRELATED. IF THEY ARE GENERATED BY AN UNDERLYING CONTINUOUS PROCESS, THE CORRELATION BETWEEN NEARBY OBSERVATIONS TYPICALLY INCREASES AS THE TIME DISTANCE BETWEEN THE OBSERVATIONS DECREASES. FOR THIS REASON, THE PRECISION OF A TIME AVERAGE TYPICALLY DIFFERS FROM THAT OF AN ENSEMBLE AVERAGE OF THE SAME SAMPLE SIZE.

STRICT STATIONARITY IS DIFFICULT TO ESTABLISH. A MORE USEFUL CONCEPT OF STATIONARITY IS WEAK STATIONARITY. A TIME SERIES IS WEAKLY STATIONARY IF THE MEAN AND VARIANCE ARE CONSTANT OVER TIME (I.E., THE MEAN AND VARIANCE OF Xt ARE CONSTANT FOR ALL VALUES OF THE INDEX t), AND THE COVARIANCE BETWEEN ANY TWO VARIABLE Xt AND Xt+k IS CONSTANT FOR A SPECIFIED VALUE OF k. (WEAK STATIONARITY IS ALSO CALLED SECOND-ORDER STATIONARITY OR COVARIANCE STATIONARITY.)

IN THIS PRESENTATION WE WILL BE WORKING WITH WEAK STATIONARITY, NOT STRICT STATIONARITY.

EXAMPLES:

Note: This version of the presentation does not include figures.

Figure: Example of stationary time series.

Figure: Example of a nonstationary time series exhibiting explosive behavior.

Figure: Example of nonseasonal homogeneous nonstationary time series.

Figure: Example of seasonal homogeneous nonstationary time series.

Figure: Example of heteroscedastic nonstationary time series.

THE MEAN OF A STATIONARY STOCHASTIC PROCESS IS:

![]()

THE VARIANCE IS:

![]()

AND THE AUTOCOVARIANCE AT LAG k IS:

![]()

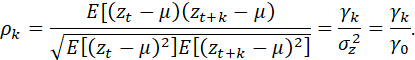

THE AUTOCORRELATION AT LAG k IS:

THE SEQUENCE OF AUTOCOVARIANCES FOR LAGS k = 1, 2, … IS CALLED THE AUTOCOVARIANCE FUNCTION, AND THE SEQUENCE OF AUTOCORRELATIONS FOR LAGS k = 1, 2,… IS CALLED THE AUTOCORRELATION FUNCTION. THE AUTOCORRELATION FUNCTION IS DENOTED AS ACF.

THE COSINE FOURIER TRANSFORM OF THE AUTOCOVARIANCE FUNCTION IS CALLED THE POWER SPECTRUM (OR SIMPLY, THE SPECTRUM).

![]()

THE POWER SPECTRUM IS A MEASURE OF THE DISTRIBUTION OF THE VARIANCE OF A TIME SERIES OVER A RANGE OF FREQUENCIES. (SEE BJRL FOR DISCUSSION.)

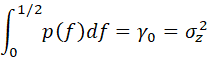

INTEGRATING THE PRECEDING FUNCTION OVER ITS RANGE, WE OBTAIN:

THE POWER SPECTRUM CONTAINS ALL OF THE INFORMATION IN THE AUTOCOVARIANCE FUNCTION; EITHER ONE MAY BE CONSTRUCTED FROM THE OTHER. THE POWER SPECTRUM IS OF DIRECT INTEREST IN PHYSICAL APPLICATIONS INVOLVING DETERMINISTIC FREQUENCIES, BUT ALSO OF INTEREST FOR TESTING THE ADEQUACY OF TENTATIVE MODELS IN GENERAL.

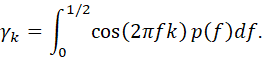

THE FORMULA FOR THE AUTOCOVARIANCE FUNCTION IN TERMS OF THE POWER SPRECTRUM IS:

THE SPECTRAL DENSITY FUNCTION IS THE POWER SPECTRUM NORMALIZED BY DIVIDING BY THE VARIANCE:

![]()

THE SPECTRAL DENSITY FUNCTION HAS THE SAME PROPERTIES AS AN ORDINARY PROBABILITY DENSITY FUNCTION, I.E., IT IS POSITIVE AND INTEGRATES TO ONE.

LET x = (x1,…,xn)’ DENOTE A SEQUENCE OF n OBSERVATIONS FROM A TIME SERIES REALIZATION (OBSERVATION) OF A STOCHASTIC PROCESS, WHERE n DENOTES THE NUMBER OF TIME POINTS IN THE OBSERVED SEQUENCE.

(IN THIS PRESENTATION WE SHALL USUALLY DENOTE ROW VECTORS, SUCH AS A SEQUENCE OF OBSERVED VALUES OF A TIME SERIES, USING PRIMED BOLD-FACE LETTERS. IN GENERAL, ABSTRACT (CONCEPTUAL, THEORETICAL) RANDOM VARIABLES WILL BE DENOTED BY UPPER-CASE LETTERS AND REALIZED VALUES BY LOWER-CASE LETTERS. THIS PRACTICE IS NOT UNIVERSAL, HOWEVER, AND WE MAY DEPART FROM IT IN ORDER TO FOLLOW THE NOTATION OF A CITED AUTHOR (AND REPRESENT ABSTRACT RANDOM VARIABLES IN EITHER UPPOR OR LOWER CASE). VECTORS ARE SHOWN IN BOLDFACE FONT. MATRICES ARE SHOWN IN NON-BOLDFACE FONT. (THIS LAST CONVENTION IS NOT STANDARD, AND WILL BE CHANGED IN A SUBSEQUENT VERSION.)

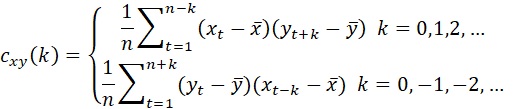

THE SAMPLE ESTIMATES OF THE MEAN, VARIANCE AND AUTOCOVARIANCE AT LAG k ARE:

![]()

![]()

AND

![]()

NOTE THAT IT IS CUSTOMARY

IN TIME SERIES ANALYSIS TO USE A DIVISOR n INSTEAD OF n-1 IN THE EXPRESSION ![]() FOR THE ESTIMATED MEAN. IF THE DIVISOR n-1 IS USED,

THE SYMBOL

FOR THE ESTIMATED MEAN. IF THE DIVISOR n-1 IS USED,

THE SYMBOL ![]() IS USED.

IS USED.

THE AUTOCORRELATION AT LAG k IS ESTIMATED AS:

![]()

AUTOCOVARIANCE AND AUTOCORRELATION MATRICES MAY BE FORMED FOR THE VARIATES COMPRISING A TIME SERIES, BUT, FOR A STATIONARY SERIES, THEY ARE HIGHLY REDUNDANT, SINCE ALL ENTRIES ALONG ANY DIAGONAL PARALLELLING THE TOP-LEFT TO LOWER-RIGHT PRINCIPAL DIAGONAL ARE IDENTICAL. FOR THIS REASON, FOR UNIVARIATE TIME SERIES, ATTENTION FOCUSES ON THE AUTOCOVARIANCE FUNCTION AND AUTOCORRELATION FUNCTION, NOT ON THE FULL AUTOCOVARIANCE AND AUTOCORRELATION MATRICES FOR A LONG TIME SERIES. AUTOCOVARIANCE AND AUTOCORRELATION MATRICES ARE OF INTEREST, HOWEVER, FOR SHORT SERIES. THE AUTOCORRELATION FUNCTION IS SIMPLY THE LIST OF ENTRIES ALONG THE DIAGONAL (OF THE AUTOCORRELATION MATRIX) GOING FROM UPPER RIGHT TO LOWER LEFT.

THE SAMPLE ESTIMATES OF THE POWER SPECTRUM AND SPECTRAL DENSITY FUNCTION OBTAINED BY INSERTING SAMPLE ESTIMATES FOR AUTOCOVARIANCES OR AUTOCORRELATIONS ARE POOR (INCONSISTENT AND OF HIGH VARIANCE). THE REASON FOR THIS IS THAT THE ESTIMATES OF AUTOCOVARIANCES AND AUTOCORRELATIONS AT HIGH LAGS ARE BASED ON A SMALL SAMPLE SIZES, AND THEIR VARIANCES REMAIN HIGH EVEN AS THE TOTAL SAMPLE SIZE INCREASES.

USEFUL ESTIMATES ARE OBTAINED BY WEIGHTING TO DIMINISH THE CONTRIBUTION TO THE ESTIMATE FROM LOWER FREQUENCIES (I.E., FROM LONG LAGS, FOR WHICH THE AUTOCOVARIANCE AND AUTOCORRELATION ESTIMATES ARE BASED ON FEW OBSERVATIONS). A SMOOTHED ESTIMATE OF THE POWER SPECTRUM IS:

![]()

WHERE THE λk ARE WEIGHTS CALLED A LAG WINDOW.

THIS PRESENTATION PRESENTS VERY LITTLE MATERIAL ON THE TIME SERIES ANALYSIS IN THE FREQUENCY DOMAIN. REFERENCES ON THIS TOPIC INCLUDE THE FOLLOWING:

1. Jenkins, Gwilym M. and Donald G. Watts, Spectral Analysis and its applications, Holden-Day, 1968

2. Priestley, M. B., Spectral Analysis and Time Series, Academic Press, 1981

3. Harris, Bernard, ed., Spectral Analysis of Time Series, Wiley, 1967

THE PARTIAL AUTOCORRELATION COEFFICIENT IS DEFINED AS THE k-th COEFFICIENT, Økk, OF AN AUTOREGRESSIVE REPRESENTATION OF ORDER k. CONSIDERED AS A FUNCTION OF k, THIS QUANTITY IS CALLED THE PARTIAL AUTOCORRELATION FUNCTION (PACF), UNLIKE THE ACF, THE PACF DOES NOT CHARACTERIZE A STOCHASTIC PROCESS. IT IS A DIAGNOSTIC TOOL USED TO HELP IDENTIFY THE ORDER OF A STOCHATIC PROCESS. FOR AN AR(p) PROCESS THE PACF WILL BE NONZERO FOR k <=p AND ZERO FOR k > p.

FOR AN AR(p) PROCESS, THE ACF WILL DIE OFF, I.E., TEND TO DECREASE AS THE LAG INCREASES. FOR A MA(q) PROCESS, THE ACF WILL CUT OFF AT THE ORDER, q, OF THE PROCESS. IN CONTRAST, FOR AN AR(p) PROCESS THE PACF WILL CUT OFF AT THE ORDER, p, OF THE PROCESS, AND FOR AN MA(q) PROCESS THE PACF WILL DIE OFF. THE ACF AND PACF ARE HENCE USEFUL TOOLS IN ASSESSING CANDIDATE ORDERS FOR AR AND MA MODELS. FOR ARMA PROCESSES, THE ACF AND PACF WILL TEND TO DIE OFF AFTER A CERTAIN LAG.

THEORETICAL MODELS: WHITE NOISE, AUTOREGRESSIVE, MOVING AVERAGE, ARMA, ARIMA, SEASONAL

THERE ARE A NUMBER OF TYPES OF STOCHASTIC PROCESS MODELS, OR TIME SERIES MODELS, THAT ARE HIGHLY USEFUL FOR REPRESENTING REAL-WORLD PHENOMENA. SOME OF THEM ARE STATIONARY, AND SOME ARE A PARTICULAR TYPE OF NONSTATIONARY, CALLED HOMOGENEOUS NONSTATIONARY. THESE MODELS WILL NOW BE DESCRIBED.

EXAMPLES OF EACH MODEL WILL BE PRESENTED. THE MODEL WILL BE REPRESENTED BY THE MODEL EQUATION, A SAMPLE TIME SERIES, THE ACF, THE PACF AND THE SPECTRAL DENSITY FUNCTION.

IN GENERAL, A STOCHASTIC PROCESS MAY BE REPRESENTED BY THE JOINT PROBABILITY DISTRIBUTION FUNCTION OR THE MODEL. SINCE A NORMAL PROCESS (I.E., ONE IN WHICH THE MODEL ERROR TERMS ARE NORMALLY DISTRIBUTED) IS CHARACTERIZED (DEFINED, COMPLETELY SPECIFIED) BY ITS MEAN, VARIANCES AND COVARIANCES, A NORMAL PROCESS MAY BE REPRESENTED BY ITS MEAN, VARIANCE AND AUTOCOVARIANCE FUNCTION (OR POWER SPECTRUM). IN WHAT FOLLOWS, WE WILL ASSUME THAT THE MODEL RESIDUALS HAVE MEAN ZERO AND CONSTANT VARIANCE σ2. IN THIS CASE, A NORMAL PROCESS IS CHARACTERIZED BY THE ACF OR SPECTRAL DENSITY FUNCTION (IN ADDITION TO THE VARIANCE, σ2).

STATIONARY PROCESSES

WHITE NOISE PROCESS

A WHITE NOISE

PROCESS IS A SEQUENCE OF INDEPENDENT AND IDENTICALLY DISTRIBUTED RANDOM

VARIABLES. IT IS USUALLY DENOTED AS A SEQUENCE a1, a2,

…, at ,…. IT IS USUALLY ASSUMED TO HAVE MEAN ZERO. THE VARIANCE IS

DENOTED BY ![]()

MODEL:

![]()

Figures: Sample time series, ACF, PACF, SDF.

IN THE FOLLOWING, WE SHALL USE THE SYMBOL at TO DENOTE A WHITE NOISE PROCESS (I.E., A SEQUENCE OF UNCORRELATED RANDOM VARIABLES WITH MEAN ZERO AND VARIANCE σ2).

MOVING AVERAGE (MA) PROCESS

GENERAL MODEL:

![]()

WHERE

![]()

IN ORDER FOR THIS MODEL TO REPRESENT REALISTIC PROCESSES, IT IS NECESSARY TO RESTRICT THE MODEL SUCH THAT THE ROOTS OF THE EQUATION

![]()

ARE LOCATED OUTSIDE THE UNIT CIRCLE. THIS CONDITION IS CALLED THE INVERTIBILITY PROPERTY. FOR INVERTIBLE PROCESSES, THE INFLUENCE OF PAST OBSERVATIONS TENDS TO DIMINISH AS THE TIME INTERVAL INCREASES.

SAMPLE MODEL:

![]()

OR

![]()

Figures: Sample time series, ACF, PACF, SDF.

AUTOREGRESSIVE (AR) PROCESS

GENERAL MODEL:

![]()

OR

![]()

OR

![]()

OR

![]()

WHERE

![]()

IN ORDER FOR THE PROCESS TO BE STATIONARY, IT IS NECESSARY THAT ALL ROOTS OF THE EQUATION

![]()

ARE LOCATED OUTSIDE THE UNIT CIRCLE.

SAMPLE MODEL:

![]()

OR

![]()

Figures: Sample time series, ACF, PACF, SDF.

AUTOREGRESSIVE MOVING AVERAGE (ARMA) PROCESS

THE AUTOREGRESSIVE AND MOVING AVERAGE PROCESSES MAY BE COMBINED, INTO WHAT IS CALLED AN AUTOREGRESSIVE – MOVING AVERAGE PROCESS.

GENERAL MODEL:

![]()

OR

![]()

OR

![]()

OR

![]()

WHERE

![]()

AND

![]()

SAMPLE MODEL:

![]()

OR

![]()

Figures: Sample time series, ACF, PACF, SDF.

SEASONAL PROCESS

SUPPOSE THAT OBSERVATIONS OCCURRING AT AN INTERVAL OF s TIME UNITS APART ARE RELATED. FOR EXAMPLE, MONTHLY SALES OF A PRODUCT MAY FOLLOW AN ANNUAL PATTERN IN WHICH OBSERVATIONS 12 MONTHS APART ARE CORRELATED. A USEFUL MODEL IN THIS SITUATION IS A MULTIPLICATIVE SEASONAL MODEL.

GENERAL MODEL (MULTIPLICATIVE SEASONAL ARMA MODEL):

![]()

WHERE THE PERIOD OF THE SEASON IS s, THE INTERVAL-s BACKSHIFT OPERATOR IS DEFINED BY

![]()

![]()

AND

![]()

AS IN THE CASE OF NONSEASONAL MODELS, IT IS REQUIRED THAT THE ROOTS OF THE ΦP AND ΘQ POLYNOMIALS BE LOCATED OUTSIDE THE UNIT CIRCLE.

SAMPLE MODEL:

![]()

OR

![]()

Figures: Sample time series, ACF, PACF, SDF.

HOMOGENEOUS NONSTATIONARY PROCESS

THE MODELS DESCRIBED ABOVE, IN WHICH THE ROOTS OF THE PHI POLYNOMIALS ARE OUTSIDE THE UNIT CIRCLE, REPRESENT STATIONARY PROCESSES. IF IT IS ALLOWED FOR THE ROOTS OF THE PHI POLYNOMIALS TO BE ON THE UNIT CIRCLE, THE PROCESS IS NONSTATIONARY, BUT IN A PARTICULAR WAY. THE LEVEL OF THE PROCESS MAY WANDER, BUT EXPLOSIVE BEHAVIOR DOES NOT OCCUR.

IF THE ROOTS OF THE PHI POLYNOMIALS ARE IMAGINARY, THE PROCESS WILL EXHIBIT RANDOM PERIODIC BEHAVIOR THAT APPEARS SINUSOIDAL IN NATURE. IF THE ROOTS ARE REAL, THE PROCESS LEVEL WANDERS. PERIODIC BEHAVIOR MAY BE PRESENT, BUT IT IS NOT SINUSOIDAL IN NATURE.

THE TYPE OF NONSTATIONARY

BEHAVIOR EXHIBITED BY SERIES HAVING ROOTS ON THE UNIT CIRCLE IS CALLED HOMOGENEOUS

NONSTATIONARY BEHAVIOR. THE MOST WIDELY USED MODELS OF THIS SORT ARE ONES

IN WHICH THE PHI POLYNOMIAL HAS FACTORS OF THE FORM ![]() = (1 – B) OR

= (1 – B) OR ![]() = (1 – Bs)

(THAT IS, THE ROOTS ARE REAL). THESE MODELS HAVE THE FORM

= (1 – Bs)

(THAT IS, THE ROOTS ARE REAL). THESE MODELS HAVE THE FORM

![]()

IN MOST APPLICATIONS, THERE ARE ONLY ONE OR TWO PHI PARAMETERS IN EACH PHI POLYNOMIAL, ONE OR TWO THETA PARAMETERS IN EACH THETA PARAMETER, AND THE VALUES OF d AND D ARE USUALLY ZERO OR ONE.

NOTATION: THE PRECEDING MODEL IS REFERRED TO AS A BOX-JENKINS

MODEL WITH PARAMETERS (p,d,q) x (P,D,Q)s (OR OF ORDER (p,d,q)

x (P,D,Q)s). SOME AUTHORS USE THE SYMBOL Δ TO DENOTE THE

FIRST DIFFERENCE (1 – B) INSTEAD OF ![]() . IN ECONOMETRICS, IT IS STANDARD PRACTICE TO USE L

TO DENOTE THE BACKSHIFT OPERATOR (INSTEAD OF B).

. IN ECONOMETRICS, IT IS STANDARD PRACTICE TO USE L

TO DENOTE THE BACKSHIFT OPERATOR (INSTEAD OF B).

A MODEL HAVING THE

DIFFERENCE TERMS (![]() TERMS) IS CALLED AN INTEGRATED PROCESS. A

MODEL HAVING AN AUTOREGRESSIVE (PHI) POLYNOMIAL, DIFFERENCE TERMS, AND A MOVING

AVERAGE (THETA) POLYNOMIAL IS CALLED AN AUTOREGRESSIVE INTEGRATED MOVING

AVERAGE (ARIMA) PROCESS.

TERMS) IS CALLED AN INTEGRATED PROCESS. A

MODEL HAVING AN AUTOREGRESSIVE (PHI) POLYNOMIAL, DIFFERENCE TERMS, AND A MOVING

AVERAGE (THETA) POLYNOMIAL IS CALLED AN AUTOREGRESSIVE INTEGRATED MOVING

AVERAGE (ARIMA) PROCESS.

EXAMPLE:

![]()

OR

![]()

Figures: Sample time series.

EXAMPLE:

![]()

OR

![]()

Figures: Sample time series.

EXAMPLE:

![]()

OR

![]()

Figures: Sample time series.

ALTHOUGH DIFFERENCING IS A WIDELY USED METHOD FOR TRANSFORMING TO ACHIEVE STATIONARITY, IT IS APPROPRIATE ONLY IF THE UNDERLYING STOCHASTIC PROCESS IS REASONABLY REPRESENTED BY AN ARIMA MODEL HAVING A REAL UNIT ROOT. FOR A SERIES THAT FLUCTUATES AROUND A DETERMINISTIC TREND, APPLYING THIS TRANSFORMATION TO ACHIEVE STATIONARITY WOULD BE A MISTAKE. DIFFERENCING WOULD IN FACT REMOVE THE TREND, BUT IT WOULD BE AN INCORRECT SPECIFICATION, AND WOULD IN FACT INTRODUCE A UNIT ROOT INTO THE TRANSFORMED DATA. A FORECASTING MODEL BASED ON THIS MODEL WOULD PRODUCE REASONABLE LEAD-ONE FORECASTS, BUT THE FORECASTS BEYOND THAT POINT WOULD HAVE MUCH LARGER ERROR VARIANCES THAN FORECASTS BASED ON THE CORRECT MODEL.

Figure: Deterministic trend plus white noise series.

IMPULSE RESPONSE FUNCTION (IRF)

AS MENTIONED, FOR A (WEAKLY)

STATIONARY PROCESS, THE ROOTS OF THE PHI AND THETA POLNOMIALS ARE OUTSIDE THE UNIT

CIRCLE. IN THIS CASE, THE LINEAR OPERATOR ![]() (B) MAY BE INVERTED, AND THE ARMA MODEL

(B) MAY BE INVERTED, AND THE ARMA MODEL

![]()

MAY BE WRITTEN AS

![]()

WHERE

![]()

THIS REPRESENTATION IS CALLED A WOLD DECOMPOSITION, AND THE SERIES Ψ1, Ψ2,… IS CALLED THE IMPULSE RESPONSE FUNCTION (IRF). THE Ψ SERIES IS FINITE FOR A PURE MOVING AVERAGE PROCESS, AND INFINITE FOR AN AUTOREGRESSIVE MODEL (EITHER AR OR ARMA).

THE FUNCTION Ψk SHOWS THE AVERAGE EFFECT OF A UNIT INCREASE IN THE MODEL INPUT at, ON THE MODEL OUTPUT, zt+k. NOTE THAT THE IMPULSE RESPONSE FUNCTION REPRESENTS THE EFFECT OF A UNIT CHANGE IN THE INPUT (at) WITH THE PROCESS FUNCTIONING AS SPECIFIED BY A CAUSAL MODEL OF THE STOCHASTIC PROCESS UNDERLYING THE MODEL. IT REPRESENTS THE EFFECT OF AN OBSERVED UNIT CHANGE IN THE INPUT IF THE MODEL ERROR TERMS OF UNDERLYING PROCESS REPRESENT OBSERVED CHANGES; IT REPRESENTS AN ESTIMATE OF THE EFFECT OF A FORCED CHANGE IN THE INPUT IF THE MODEL ERROR TERMS OF THE UNDERLYING MODEL REPRESENT FORCED CHANGES.

WHILE THE IMPULSE RESPONSE FUNCTION CHARACTERIZES A TIME SERIES, IT IS NOT USED A LOT IN ANALYSIS OF UNIVARIATE TIME SERIES MODELS THAT INCLUDE NO EXPLANATORY VARIABLES, IN WHICH CASE IT INDICATES THE RESPONSE TO CHANGES IN THE MODEL ERROR TERMS. IT IS USED MORE IN ANALYSIS OF MODELS CONTAINING EXPLANATORY VARIABLES, TO SHOW THE RESPONSE (OF AN OUTPUT VARIABLE) TO CHANGES IN THEM.

THE SUM OF ALL OF THE IMPULSE RESPONSES, WHICH IS THE TOTAL EFFECT OF A UNIT INCREASE ON ALL FUTURE OUTPUTS,

![]()

IS CALLED THE TOTAL MULTIPLIERS OR LONG-RUN EFFECTS. THE SUM OF THE IMPULSE RESPONSES UP TO A VALUE n

![]()

IS CALLED THE ACCUMULATED RESPONSE OVER n PERIODS, OR THE n-th INTERIM MULTIPLIERS.

ANALYSIS OF THE IMPULSE RESPONSE FUNCTION IS REFERRED TO AS MULTIPLIER ANALYSIS IN ECONOMICS.

THE PRECEDING DISCUSSION CONSIDERS THE IMPULSE RESPONSE FUNCTION FOR A STATIONARY PROCESS. THE DEFINITION OF THE IMPULSE RESPONSE FUNCTION IS THE SAME FOR HOMOGENEOUS NONSTATIONARY PROCESSES, BUT THE PROCESS CANNOT BE REPRESENTED AS A CONVERGENT SERIES, AS SHOWN ABOVE. FORMULAS FOR THE IMPULSE RESPONSE FUNCTION (I.E., THE Ψ’s) IN THE HOMOGENEOUS NONSTATIONARY CASE WILL BE PRESENTED LATER.

STATIONARITY TRANSFORMATIONS, TESTS OF HYPOTHESES

THE PRECEDING MODELS – THE CLASS OF AUTOREGRESSIVE INTEGRATED MOVING AVERAGE MODELS – CAN REPRESENT A WIDE RANGE OF PHENOMENA, AND THE MODELS MAY BE EITHER STATIONARY OR NONSTATIONARY. IN ORDER TO USE ONE OF THESE MODELS IN A PARTICULAR APPLICATION, IT MUST BE DEMONSTRATED THAT THE OBSERVED TIME SERIES CAN REASONABLY BE REPRESENTED BY A MEMBER OF THIS CLASS.

AN ARIMA MODEL IS APPROPRATE IF THE OBSERVED DATA SERIES CAN BE DEMONSTRATED TO BE STATIONARY, OR IF IT CAN BE CONVERTED TO A STATIONARY TIME SERIES BY DIFFERENCING (OR, MORE GENERALLY, BY TRANSFORMING USING ANY POLYNOMIAL FILTER FOR WHICH THE ROOTS ARE ON THE UNIT CIRCLE).

A STANDARD PROCEDURE FOR TRANSFORMING A NONSTATIONARY STOCHASTIC PROCESS TO A STATIONARY ONE IS DIFFERENCING. DIFFERENCING IS APPROPRIATE IF THE OBSERVED SERIES EXHIBITS HOMOGENEOUS NONSTATIONARY BEHAVIOR OR SEASONALITY. SUCH BEHAVIOR IS EASILY RECOGNIZED BY VISUAL INSPECTION.

A STATISTICAL TEST OF THE HYPOTHESIS THAT A PROCESS IS NONSTATIONARY VS. THE ALTERNATIVE THAT IT IS STATIONARY IS THE DICKEY-FULLER TEST. (SEE ECONOMETRIC ANALYSIS 7th ED. BY WILLIAM H. GREENE (PEARSON EDUCATION / PRENTICE HALL, 2012) FOR DISCUSSION.) THE DICKEY-FULLER TEST IS A LITTLE COMPLICATED, SINCE THE SAMPLING DISTRIBUTION OF THE TEST STATISTIC DEPENDS ON THE NATURE OF THE NONSTATIONARITY (I.E., ON THE TRUE PROCESS). THERE IS NOT JUST ONE DICKEY-FULLER TEST, BUT A NUMBER OF THEM, FOR DIFFERENT SITUATIONS (E.G., AN APPARENT RANDOM WALK, OR A RANDOM WALK WITH DRIFT, OR A RANDOM WALK WITH TREND). (THE SAMPLING DISTRIBUTION IS NOT AVAILABLE IN CLOSED FORM, EVEN FOR SIMPLE HOMOGENEOUS NONSTATIONARY PROCESSES SUCH AS RANDOM WALKS.) FOR SHORT TIME SERIES, THE TESTS ARE NOT RELIABLE.

A STATISTICAL TEST OF THE HYPOTHESIS OF NONSTATIONARITY (OR STATIONARITY) IS CALLED A “UNIT ROOT” TEST, SINCE IT IS A TEST OF WHETHER THE AUTOREGRESSIVE POLYNOMIAL OF AN AUTOREGRESSIVE PROCESS HAS A ROOT ON (OR OUTSIDE) THE UNIT CIRCLE.

IN ECONOMETRIC APPLICATIONS, MUCH ATTENTION HAS BEEN FOCUSED ON WHETHER DIFFERENCING IS REQUIRED TO ACHIEVE STATIONARITY, VS. THE USE OF A MODEL THAT HAS A ROOT OF THE AUTOREGRESSIVE POLYNOMIAL JUST OUTSIDE THE UNIT CIRCLE, E.G., (1 – B) VS. (1 - .95B). IT SHOULD BE NOTED THAT THE BOX-JENKINS (ARIMA) MODELS ARE USED PRIMARILY IN SHORT-TERM FORECASTING, AND WHICH OF THESE REPRESENTATIONS IS SELECTED WILL MAKE LITTLE DIFFERENCE IN THE ACCURACY OF SHORT-TERM FORECASTS. THE LONG-TERM BEHAVIOR OF THESE MODEL ALTERNATIVES IS SUBSTANTIALLY DIFFERENT, HOWEVER, SINCE THE EFFECT OF A SHOCK AT A PARTICULAR TIME DIES OUT WITH THE LATTER MODEL (1 - .95B) BUT NOT THE FORMER MODEL (1 – B). FOR SHORT TIME SERIES, THE POWER OF THE DICKEY-FULLER TEST TO DISCRIMINATE BETWEEN THESE TWO MODEL CHOICES WILL BE LOW. TO CREDIBLY MAKE SUCH AN ASSESSMENT WOULD REQUIRE A QUITE LONG TIME SERIES.

IN PRACTICE, ONLY ONE OR TWO DIFFERENCES IS NECESSARY TO ACHIEVE STATIONARITY FOR A NONSTATIONARY SERIES. FOR SEASONAL SERIES, IT IS USUAL TO SEE A DIFFERENCE OF INTERVAL ONE AND INTERVAL s, WHERE s DENOTES THE NUMBER OF TIME INTERVALS BETWEEN RECURRING SEASONS.

MODEL SPECIFICATION; ESTIMATION OF PARAMETERS (METHOD OF MOMENTS, LEAST-SQUARES, MAXIMUM LIKELIHOOD, BAYESIAN); NONLINEAR ESTIMATION

AFTER IT HAS BEEN ESTABLISHED THAT THE DATA GENERATING PROCESS IS STATIONARY, OR DIFFERENCING HAS BEEN APPLIED TO TRANSFORM THE PROCESS TO A STATIONARY ONE, WORK MAY PROCEED ON MODEL SPECIFICATION AND ESTIMATION OF MODEL PARAMETERS.

THE FIRST STEP IS TO ESTIMATE VALUES OF THE STRUCTURAL PARAMETERS, p, d, q, s, P, D, Q. THIS IS DONE BY EXAMINING THE AUTOCORRELATION FUNCTION AND PARTIAL AUTOCORRELATION FUNCTION. THE VALUES FOR s, d AND d WILL HAVE BEEN DETERMINED DURING THE COURSE OF DECIDING WHETHER AND HOW DIFFERENCING SHOULD BE APPLIED TO TRANSFORM A NONSTATIONARY SERIES TO A STATIONARY ONE. WHAT REMAINS IS TO DETERMINE REASONABLE VALUES FOR p, q, P AND Q.

AS EXHIBITED IN THE FIGURES PRESENTED EARLIER, THE AUTOCORRELATION FUNCTION CUTS OFF FOR A PURE MOVING AVERAGE PROCESS, AND TAILS OFF FOR A PURE AUTOREGRESSIVE PROCESS. THE PARTIAL AUTOCORRELATION FUNCTION CUTS OFF FOR A PURE AUTOREGRESSIVE PROCESS AND TAILS OFF FOR A PURE MOVING AVERAGE PROCESS. FOR MIXED PROCESSES, THE BEHAVIOR IS MORE COMPLICATED. REFERENCE BJRL PRESENTS TABLES THAT MAY BE USED AS GUIDES TO INFER TENTATIVE VALUES FOR p, q, P AND Q (FOR ARBITRARY s).

NOTE THAT THE MODELS DESCRIBED HERE ARE NOT THE FULL RANGE OF MODELS THAT MAY BE CONSIDERED. SOME MODELS MAY CONTAIN MEANS AND TIME TRENDS. (A MEAN MAY BE REMOVED BY SINGLE DIFFERENCING, AND A TREND BY DOUBLE DIFFERENCING, BUT SUCH TRANSFORMATIONS ARE NOT RECOMMENDED FOR SERIES HAVING A CONSTANT MEAN OR LINEAR TREND, SINCE DIFFERENCING IN THIS CASE WILL INTRODUCE A UNIT ROOT INTO THE MODEL ERROR TERM, CAUSING THE MODEL TO BE NON-INVERTIBLE.)

FOR A SPECIFIED MODEL STRUCTURE (VALUES OF p, d, q, s, P, D, Q), THE MODEL PARAMETERS (PHIs AND THETAs) MAY BE ESTIMATED IN A NUMBER OF WAYS. THE STANDARD ESTIMATION PROCEDURES ARE THE FOLLOWING. NOTE THAT PRIOR TO ESTIMATION, ADDITIONAL TRANSFORMATIONS MAY BE APPLIED TO THE DATA (E.G., A LOGARITHMIC TRANSFORMATION, IF THE STANDARD DEVIATION OF THE OBSERVATIONS APPEARS TO VARY ACCORDING TO THE LEVEL OF THE SERIES, IN ORDER TO ACHIEVE A CONSTANT VARIANCE FOR THE MODEL RESIDUALS).

METHOD OF MOMENTS

FROM THE AVAILABLE SAMPLE DATA (TRANSFORMED TO STATIONARY), THE FIRST AND SECOND-ORDER SAMPLE MOMENTS (MEANS, VARIANCE, COVARIANCES) ARE CALCULATED. FOR AN ASSUMED MODEL STRUCTURE (VALUES OF p,q,s,P,Q), THE CORRESPONDING POPULATION MOMENTS ARE DETERMINED, ASSUMING AN UNDERLYING PROBABILITY DISTRIBUTION FOR THE MODEL ERROR TERM (SUCH AS NORMALITY). THE POPULATION MOMENTS ARE FUNCTIONS OF THE MODEL PARAMETERS (PHIs AND THETAs). THE POPULATION VALUES OF THE MOMENTS ARE SET EQUAL TO THE SAMPLE VALUES, AND THE EQUATIONS SOLVED FOR THE MODEL PARAMETERS.

METHOD OF LEAST SQUARES

THE PARAMETER ESTIMATES ARE THE VALUES THAT MINIMIZE THE RESIDUAL SUM OF SQUARES. THE COMPUTATIONAL PROCEDURES FOR MINIMIZING THE RESIDUAL SUM OF SQUARES IS SOMEWHAT COMPLICATED. IT IS DESCRIBED IN DETAIL IN THE TIMES TECHNICAL MANUAL AND IN THE BJRL BOOK.

THE METHOD OF LEAST SQUARES IS VERY BASIC, IN THAT IT IS A PROCEDURE THAT MAY BE IMPLEMENTED WITHOUT ANY CONSIDERATION OF AN UNDERLYING PROBABILITY DISTRIBUTION.

TO IMPLEMENT THE LEAST-SQUARES METHOD FOR A MODEL THAT INCLUDES MOVING-AVERAGE TERMS, VALUES MUST BE SPECIFIED FOR THE q MODEL ERROR TERMS PRECEDING THE START OF THE SAMPLE SERIES. THERE ARE TWO APPROACHES TO THE LEAST-SQUARES METHOD, CORRESPONDING TO HOW THIS IS DONE. FOR CONDITIONAL LEAST-SQUARES ESTIMATES, THE INITIAL VALUES OF THE MODEL ERROR TERMS ARE ASSUMED TO BE EQUAL TO ZERO. FOR UNCONDITIONAL LEAST-SQUARES ESTIMATES, THE INITIAL VALUES ARE ESTIMATED AS MODEL PARAMETERS. THE CONDITIONAL APPROACH IS USED BECAUSE IT IS SIMPLER TO IMPLEMENT (E.G., CLOSED-FORM SOLUTIONS MAY BE AVAILABLE FOR THE CONDITIONAL METHOD). FOR A LONG SAMPLE TIME SERIES, THE TWO METHODS PRODUCE SIMILAR RESULTS. THE DIFFERENCE IN THE METHODS IS MOST PRONOUNCED FOR MOVING-AVERAGE PARAMETER VALUES NEAR THE UNIT CIRCLE (WHERE THE EFFECT OF MODEL ERROR TERMS PERSISTS FOR A LONGER TIME, SO THAT THE EFFECT OF THE ASSUMED VALUES OF ZERO PERSISTS FOR A LONGER TIME).

MAXIMUM LIKELIHOOD

THE PARAMETER VALUES ARE THOSE THAT MAXIMIZE THE LIKELIHOOD, ASSUMING A NORMAL DISTRIBUTION (OR OTHER SUITABLE DISTRIBUTION) FOR THE MODEL ERROR TERMS.

THE ESTIMATES PRODUCED BY THE METHOD OF LEAST SQUARES PRODUCES THE SAME ESTIMATES AS THE METHOD OF MAXIMUM LIKELIHOOD FOR A NORMAL DISTRIBUTION.

AS IN THE CASE OF LEAST-SQUARES ESTIMATION, THE PARAMETER ESTIMATION MAY BE IMPLEMENTED CONDITIONAL ON SPECIFIED VALUES (ZEROS) FOR THE INITIAL VALUES OF THE MODEL ERROR TERMS, OR UNCONDITIONALLY.

BAYESIAN ESTIMATES

A PRIOR DISTRIBUTION IS SPECIFIED FOR THE MODEL PARAMETERS, AND A SAMPLING DISTRIBUTION GIVEN THE MODEL PARAMETERS. THE POSTERIOR DISTRIBUTION OF THE MODEL PARAMETERS IS DETERMINED, GIVEN THE OBSERVED SAMPLE (OF STATIONARY-TRANSFORMED DATA). THE PARAMETER ESTIMATES ARE THEIR EXPECTED VALUES, GIVEN THE POSTERIOR DISTRIBUTION.

THIS PRESENTATION WILL NOT DESCRIBE BAYESIAN ESTIMATION. REFERENCES ON THIS TOPIC INCLUDE:

GELMAN, ANDREW, JOHN B. CARLIN, HAL S. STERN, DAVID B. DUNSON, AKI VEHTARI AND DONALD B. RUBIN, BAYESIAN DATA ANAYSIS, 3RD ED., CRC PRESS, 2014

ROSSI, PETER E., GREG M. ALLENBY AND ROBERT MCCOLLUCH, BAYESIAN STATISTICS AND MARKETING, WILEY, 2005

BOX, GEORGE E. P. AND GEORGE C. TIAO, BAYESIAN INFERENCE IN STATISTICAL ANALYSIS, WILEY, 1973

CARLIN, BRADLEY P. AND THOMAS A. LOUIS, BAYES AND EMPIRICAL BAYES METHODS FOR DATA ANALYSIS, 2ND ED., CHAPMAN & HALL / CRC, 2000

LINEAR STATISTICAL MODELS VS. NONLINEAR STATISTICAL MODELS

IMPLEMENTATION OF THE PRECEDING METHODS OF ESTIMATION IS SOMEWHAT COMPLICATED, AND NUMERICAL METHODS ARE REQUIRED TO IMPLEMENT THEM IN MANY CASES. FOR PURE AUTOREGRESSIVE MODELS, THE MODELS ARE LINEAR STATISTICAL MODELS, AND THE ESTIMATION MAY PROCEED IN THE USUAL FASHION. FOR MODELS INVOLVING MOVING AVERAGE TERMS, THE MODELS ARE NOT LINEAR IN THE PARAMETERS, AND NUMERICAL METHODS MUST BE USED TO DETERMINE THE ESTIMATES.

FOR MODELS INVOLVING A SMALL NUMBER OF PARAMETERS (E.G., AN ARMA MODEL HAVING p + q < 2) THE SIMPLEST APPROACH TO PARAMETER ESTIMATION IS TO ESTIMATE THE LIKELIHOOD SURFACE FOR A RANGE (GRID) OF VALUES OF p AND q, AND SELECT THE VALUES CORRESPONDING TO THE MAXIMUM VALUE OF THE LIKELIHOOD SURFACE. THIS APPROACH IS NOT PRACTICAL FOR SITUATIONS INVOVLING A LARGE NUMBER OF PARAMETERS (SUCH AS MANY MULTIVARIATE APPLICATIONS).

TESTS OF MODEL ADEQUACY

THE PRIMARY GOAL IS TO OBTAIN A MODEL REPRESENTATION THAT REPRESENTS THE BASIC STOCHASTIC NATURE OF AN OBSERVED PROCESS WELL, IN AN EFFICIENT MANNER, I.E., WITH AS SMALL A NUMBER OF PARAMETERS AS IS REASONABLY POSSIBLE.

TESTS MAY BE APPLIED TO DETERMINE WHETHER φs AND θs AT SPECIFIED LAGS SHOULD BE RETAINED IN A PRELIMINARY MODEL. THE USUAL PROCEDURE IS TO TEST THE SIGNIFICANCE OF PARAMETERS FOR HIGHER LAGS FIRST.

A VERY IMPORTANT ASSUMPTION OF THE BOX-JENKINS MODELS IS THE ASSUMPTION THAT THE MODEL RESIDUALS (at’s) ARE UNCORRELATED, I.E., FORM A WHITE NOISE SEQUENCE. THE VALIDITY OF THIS ASSUMPTION MAY BE TESTED FROM THE AVAILABLE DATA. TESTS OF WHITENESS MAY INVOLVE EITHER THE AUTOCORRELATON FUNCTION OR THE SPECTRAL DENSITY FUNCTION. TESTS OF THIS ASSUMPTION INCLUDE THE GRENANDER-ROSENBLATT TEST, THE KOLMOGOROV-SMIRNOV TEST WITH THE LIILLIFORS CORRECTION, THE DURBIN-WATSON TEST, AND THE LJUNG-BOX TEST. FOR AN APPROPRIATE MODEL, THE MODEL RESIDUALS SHOULD APPEAR TO BE A WHITE NOISE PROCESS.

FOR LARGE n (THE SAMPLE

SIZE, AFTER DIFFERENCING TO ACHIEVE STATIONARITY) THE ESTIMATED

AUTOCORRELATIONS OF A WHITE NOISE SEQUENCE ARE APPROXIMATELY UNCORRELATED AND

NORMALLY DISTRIBUTED, WITH MEAN ZERO AND VARIANCE 1/n. IF, FOR A FITTED MODEL,

AN ESTIMATED AUTOCORRELATION EXCEEDS 1.96/![]() IN MAGNITUDE, THIS MAY BE VIEWED AS EVIDENCE THAT THE

MODEL RESIDUALS ARE NOT WHITE. UNFORTUNATELY, THE FACT THAT AN ESTIMATED

AUTOCORRELATION DOES NOT EXCEED THIS LEVEL MAY NOT BE VIEWED AS EVIDENCE

THAT THE AUTOCORRELATION IS ZERO. THE REASON FOR THIS IS THAT IF THE MODEL

RESIDUALS ARE NOT WHITE, THEN THE VARIANCE OF THE ESTIMATED AUTOCORRELATIONS

MAY BE VERY MUCH SMALLER THAN 1/n. FOR EXAMPLE, IN AN AUTOREGRESSIVE MODEL WITH

A SINGLE PARAMETER φ, THE VARIANCE OF THE LAG-ONE AUTOCORRELATION IS φ2/n. SO, IF φ IS AT ALL APPRECIABLE IN MAGNITUDE, THE VARIANCE OF

THE LAG-ONE AUTOCORRELATION IS SUBSTANTIALLY LESS THAN 1/n. IF THE VALUE 1.96/

IN MAGNITUDE, THIS MAY BE VIEWED AS EVIDENCE THAT THE

MODEL RESIDUALS ARE NOT WHITE. UNFORTUNATELY, THE FACT THAT AN ESTIMATED

AUTOCORRELATION DOES NOT EXCEED THIS LEVEL MAY NOT BE VIEWED AS EVIDENCE

THAT THE AUTOCORRELATION IS ZERO. THE REASON FOR THIS IS THAT IF THE MODEL

RESIDUALS ARE NOT WHITE, THEN THE VARIANCE OF THE ESTIMATED AUTOCORRELATIONS

MAY BE VERY MUCH SMALLER THAN 1/n. FOR EXAMPLE, IN AN AUTOREGRESSIVE MODEL WITH

A SINGLE PARAMETER φ, THE VARIANCE OF THE LAG-ONE AUTOCORRELATION IS φ2/n. SO, IF φ IS AT ALL APPRECIABLE IN MAGNITUDE, THE VARIANCE OF

THE LAG-ONE AUTOCORRELATION IS SUBSTANTIALLY LESS THAN 1/n. IF THE VALUE 1.96/![]() WERE USED TO DECIDE WHETHER THE LAG-ONE

AUTOCORRELATION WERE DIFFERENT FROM ZERO, THERE COULD (DEPENDING ON THE VALUE

OF φ)

BE A LARGE CHANGE OF WRONGLY DECIDING THAT THE TRUE LAG-ONE AUTOCORRELATION WAS

ZERO, WHEN IT WAS NOT.

WERE USED TO DECIDE WHETHER THE LAG-ONE

AUTOCORRELATION WERE DIFFERENT FROM ZERO, THERE COULD (DEPENDING ON THE VALUE

OF φ)

BE A LARGE CHANGE OF WRONGLY DECIDING THAT THE TRUE LAG-ONE AUTOCORRELATION WAS

ZERO, WHEN IT WAS NOT.

AN APPROXIMATE PORTMANTEAU TEST OF A FITTED ARIMA(p,d,q) MODEL WAS PROPOSED BY BOX AND PIERCE. IF THE FITTED MODEL IS APPROPRIATE, THEN THE STATISTIC

![]()

IS APPROXIMATELY

DISTRIBUTED AS χ2(K-p-q),

WHERE ![]() DENOTES THE k-th ESTIMATED AUTOCORRELATION AND n = N -d IS THE NUMBER OF OBSERVATIONS REMAINING AFTER

DIFFERENCING TO ACHIEVE STATIONARITY.

DENOTES THE k-th ESTIMATED AUTOCORRELATION AND n = N -d IS THE NUMBER OF OBSERVATIONS REMAINING AFTER

DIFFERENCING TO ACHIEVE STATIONARITY.

IN PRACTICE, IT WAS OBSERVED THAT FOR SAMPLES OF THE SIZE OFTEN ENCOUNTERED IN PRACTICE, THE VALUE OF THE Q STATISTIC TENDS TO BE SMALLER THAN χ2(K-p-q). LJUNG AND BOX PROPOSED A MODIFIED FORM OF THE TEST STATISTIC,

![]()

THIS STATISTIC HAS THE MEAN K – p -q OF THE χ2(K-p-q) DISTRIBUTION. IT IS A MORE SATISFACTORY STATISTIC BECAUSE THE VARIANCE OF rk(a) FOR A WHITE NOISE SERIES IS CLOSER TO (n – k))/(n(n + 2)) THE VALUE 1/n ASSUMED FOR THE BOX-PIERCE TEST.

THE STANDARD TEST FOR

WHITENESS, THEN, IS THE LJUNG-BOX TEST, WHICH TESTS WHETHER THE FIRST K

AUTOCORRELATIONS OF THE ERRORS FOR A FITTED MODEL ARE ZERO. SUPPOSE THAT THE

FITTED MODEL IS ARIMA(p,d,q). THE VALUE OF K IS CHOSEN SO THAT THE WEIGHTS ![]() OF THE MODEL,

WRITTEN IN THE FORM

OF THE MODEL,

WRITTEN IN THE FORM ![]() ARE SMALL AFTER LAG j = K. LET N DENOTE THE TOTAL NUMBER OF OBSERVATIONS AND n =

N – d DENOTE THE NUMBER OF OBSERVATIONS AFTER DIFFERENCING d TIMES TO ACHIEVE

STATIONARITY. DENOTE THE ESTIMATED MODEL RESIDUALS AS

ARE SMALL AFTER LAG j = K. LET N DENOTE THE TOTAL NUMBER OF OBSERVATIONS AND n =

N – d DENOTE THE NUMBER OF OBSERVATIONS AFTER DIFFERENCING d TIMES TO ACHIEVE

STATIONARITY. DENOTE THE ESTIMATED MODEL RESIDUALS AS ![]() AND DENOTE THE ESTIMATED AUTOCORRELATION OF THE

SEQUENCE OF ESTIMATED MODEL RESIDUALS AS

AND DENOTE THE ESTIMATED AUTOCORRELATION OF THE

SEQUENCE OF ESTIMATED MODEL RESIDUALS AS ![]() THE TEST STATISTISTIC IS

THE TEST STATISTISTIC IS

![]()

THIS TEST STATISTIC IS APPROXIMATELY DISTRIBUTED AS A χ2(K-p-q) VARIATE.

IF THE AVAILABLE DATA ARE LIMITED, TESTS OF MODEL ADEQUACY ARE PERFORMED USING ALL OF THE AVAILABLE DATA. IF SUFFICIENT DATA ARE AVAILABLE, A PREFERRED PROCEDURE IS TO ESTIMATE THE MODEL FROM ONE DATA SET AND ASSESS MODEL PERFORMANCE FROM A SEPARATE DATA SET.

MEASURES OF MODEL EFFICIENCY (INFORMATION CRITERIA): AIC, BIC, HQC

IN SPECIFYING A MODEL, A BETTER FIT (LOWER VARIANCE OF THE RESIDUALS) MAY BE ACHIEVED WITH MORE PARAMETERS, BUT THE MODEL MAY IN FACT EXHIBIT LOWER PERFORMANCE FOR PREDICTION (FORECASTING) OR CONTROL THAN A MODEL HAVING FEWER PARAMETERS. THAT IS, THERE IS A TRADE-OFF BETWEEN MODEL PRECISION (GOODNESS OF FIT) AND MODEL COMPLEXITY.

THREE STANDARD PROCEDURES ARE AVAILABLE FOR ASSISTING THE CHOICE OF A MODEL (FROM A SELECTION OF ALTERNATIVE MODELS THAT PASS TESTS OF MODEL ADEQUACY). THESE PROCEDURES FORM A MEASURE THAT INCLUDES A TERM REPRESENTING THE MAXIMIZED LIKELIHOOD AND A TERM REPRESENTING MODEL COMPLEXITY.

AKAIKE INFORMATION CRITERION (AIC)

![]()

WHERE r DENOTES THE NUMBER OF MODEL PARAMETERS (E.G., r = p + q FOR A NONSEASONAL ARMA MODEL WITHOUT A MEAN, OR r = p + q + 1 FOR A NONSEASONAL MODEL WITH A MEAN).

BAYESIAN (SCHWARZ) INFORMATION CRITERION (BIC)

![]()

HANNAN-QUINN CRITERION (HQC)

![]()

IF THE TRUE PROCESS IS AN ARMA(p,q) PROCESS, IT CAN BE SHOWN THAT THE BIC AND HQC ARE CONSISTENT IN THE SENSE THAT AS THE SAMPLE SIZE BECOMES VERY LARGE, THEY SELECT THE CORRECT MODEL (VALUES OF p AND q). THE AIC MAY SELECT A MODEL THAT IS SLIGHTLY MORE COMPLEX. IF THE TRUE PROCESS IS NOT A FINITE-ORDER ARMA PROCESS, THEN THE AIC HAS THE PROPERTY THAT AS THE SAMPLE SIZE BECOMES LARGE IT WILL SELECT, FROM A SET OF ARMA MODELS, THE ONE THAT IS CLOSEST TO THE TRUE PROCESS (WHERE CLOSENESS IS MEASURED BY THE KULLBACK-LEIBLER DIVERGENCE, A MEASURE OF DISPARITY BETWEEN MODELS).

IN THE PRECEDING FORMULAS FOR THE INFORMATION CRITERIA, THE FIRST TERM,

![]()

MAY BE APPROXIMATED BY

![]()

THE NATURAL LOGARITHM OF THE ESTIMATED VARIANCE OF THE RESIDUALS OF THE FITTED MODEL.

A DRAWBACK OF THE

INFORMATION CRITERIA IS THAT THEIR USE REQUIRES THE FITTING OF A POTENTIALLY LARGE

NUMBER OF ALTERNATIVE ARMA(p,q) MODELS, FOR ALTERNATIVE VALUES OF p AND q.

HANNAN AND RISSANEN DEVELOPED A METHOD FOR AVOIDING THIS PROBLEM. THEIR

APPROACH IS AS FOLLOWS. FIRST, ESTIMATE AN AR MODEL OF HIGH ORDER, AND USE THE

RESIDUALS OF THIS MODEL AS APPROXIMATIONS FOR THE RESIDUALS OF THE CORRECT

MODEL. THEN, REGRESS THE OBSERVED VALUE zt ON p PREVIOUS

OBSERVATIONS AND q APPROXIMATE RESIDUALS, FOR VARIOUS VALUES OF p AND q. DENOTE

THE ESTIMATED ERROR VARIANCE OF EACH OF THESE MODELS BY ![]() THEN, USING THE BIC, SELECT THE VALUES OF p AND q THAT

MINIMIZE

THEN, USING THE BIC, SELECT THE VALUES OF p AND q THAT

MINIMIZE

![]()

I.E., THE BIC. (FITTING THESE MODELS IS EASIER THAN FITTING ARMA(p,q) MODELS BECAUSE THEY ARE LINEAR STATISTICAL MODELS (REGRESSION MODELS), NOT NONLINEAR MODELS (AS ARE ARMA MODELS).) HANNAN AND RISSANEN SHOW THAT THE ESTIMATORS OF p AND q DETERMINED BY THIS METHOD CONVERGE ALMOST SURELY TO THE CORRECT VALUES.

GENERAL FORM OF AN ARIMA MODEL

THE GENERAL FORM OF A (NONSEASONAL) ARIMA MODEL IS

![]()

WHERE θ0 IS A CONSTANT,

![]()

![]()

AND

![]()

IN MODELS CONTAINING DIFFERENCE OPERATORS (FACTORS OF (1 – B)), θ0 IS USUALLY ZERO. THE MODEL ERROR TERMS, THE a’s, ARE REFERRED TO AS “SHOCKS.”

THE OPERATOR φ(B) IS CALLED THE AUTOREGRESSIVE OPERATOR. IT IS ASSUMED TO BE STATIONARY, I.E., TO HAVE ROOTS (SOLUTIONS TO φ(B) = 0) OUTSIDE THE UNIT CIRCLE.

THE OPERATOR ϕ(B) IS CALLED THE GENERALIZED AUTOREGRESSIVE OPERATOR. IT CONTAINS d ROOTS ON THE UNIT CIRCLE (SPECIFICALLY, ALL EQUAL TO ONE).

THE OPERATOR θ(B) IS CALLED THE MOVING AVERAGE OPERATOR. IT IS ASSUMED TO BE INVERTIBLE, I.E., TO HAVE ROOTS OUTSIDE THE UNIT CIRCLE.

IN THE FOLLOWING WE SHALL ASSUME THAT θ0 = 0.

ALTERNATIVE FORMS OF AN ARIMA MODEL

THREE DIFFERENT FORMS OF AN ARIMA MODEL ARE

1. THE DIFFERENCE EQUATION FORM, IN WHICH THE CURRENT VALUE OF THE OUTPUT, zt, IS EXPRESSED IN TERMS OF THE z’s AND CURRENT AND PREVIOUS VALUES OF THE a’s.

2. THE RANDOM-SHOCK FORM OF THE MODEL, IN WHICH THE CURRENT VALUE OF zt IS EXPRESSED IN TERMS OF CURRENT AND PREVIOUS a’s.

3. THE INVERTED FORM OF THE MODEL, IN WHICH THE CURRENT VALUE OF zt IS EXPRESSED IN TERMS OF A WEIGHTED SUM OF PREVIOUS z’s AND THE CURRENT a (I.E., at).

DEPENDING ON WHAT IS BEING DISCUSSED, ONE FORM IS MORE USEFUL THAN THE OTHERS.

THESE THREE FORMS OF AN ARIMA MODEL ARE NOW DESCRIBED IN FURTHER DETAIL.

DIFFERENCE-EQUATION FORM OF THE MODEL

THE GENERAL FORM OF THE MODEL IS

![]()

FOR THE DIFFERENCE-EQUATION FORM WE SIMPLY EXPAND THE ϕ AND θ POLYNOMIALS AND TRANSFER ALL BUT THE CURRENT zt TO THE RIGHT-HAND-SIDE OF THE MODEL EQUATION. THAT IS:

![]()

OR

![]()

OR

![]()

THE GENERAL FORM OF THE MODEL IS USED TO COMPACTLY DESCRIBE THE MODEL, AND TO SUCCINCTLY COMPARE ONE MODEL TO ANOTHER.

RANDOM-SHOCK FORM OF THE MODEL

IT WAS DISCUSSED EARLIER THAT A STATIONARY STOCHASTIC PROCESS MAY BE EXPRESSED AS AN INFINITE SERIES (THE WOLD DECOMPOSITION):

![]()

WHERE

![]()

SINCE A GENERAL ARIMA MODEL IS NOT STATIONARY, HOWEVER, THE WOLD THEOREM DOES NOT APPLY, AND IT CANNOT BE ASSERTED ON THAT BASIS THAT AN INFINITE-SERIES REPRESENTATION EXISTS. IN FACT, FOR NONSTATIONARY PROCESSES, THIS IS NOT POSSIBLE. IT IS POSSIBLE, HOWEVER, TO EXPRESS AN ARIMA MODEL IN A TRUNCATED (FINITE-SERIES) FORM, WHICH IS USEFUL FOR UPDATING FORECASTS AND CALCULATING FORECAST VARIANCES.

THIS REPRESENTATION IS AS FOLLOWS:

![]()

WHERE Ck(t-k) IS THE COMPLEMENTARY FUNCTION, OR GENERAL SOLUTION OF THE DIFFERENCE EQUATION

![]()

IT CAN BE SHOWN THAT THIS REPRESENTATION IS EQUAL TO:

![]()

WHERE Ek[zt] DENOTES THE CONDITIONAL EXPECTATION OF zt AT TIME k. SEE BJRL pp. 97-105 FOR DISCUSSION.

THE Ψ WEIGHTS ARE OBTAINED BY EQUATING COEFFICIENTS OF B IN THE EXPRESSION

![]()

THAT IS, RECURSIVELY FROM THE EXPRESSION

![]()

WHERE Ψ0 = 1, Ψj = 0 FOR j < 0 and θj = 0 FOR j > q.

IT CAN BE SHOWN THAT

![]()

THIS EXPRESSION SHOWS HOW TO UPDATE A FORECAST FROM ONE POINT IN TIME (I.E., t) TO THE NEXT.

INVERTED FORM OF THE MODEL

IN THE GENERAL FORM OF THE MODEL,

![]()

THE POLYNOMIAL θ(B) HAS ROOTS OUTSIDE THE UNIT CIRCLE, I.E., THE PROCESS IS INVERTIBLE, AND MAY BE EXPRESSED AS

![]()

THE π WEIGHTS ARE DETERMINED IN THE SAME WAY AS THE Ψ WEIGHTS WERE, ABOVE.

WE WRITE

![]()

AND OBTAIN THE π WEIGHTS BY EQUATING COEFFICIENTS OF B IN THE EXPRESSION

![]()

THIS YIELDS

![]()

HENCE THE π WEIGHTS MAY BE DETERMINED RECURSIVELY FROM

![]()

WHERE π0 = -1, πj = 0 FOR j < 0 and ϕj = 0 FOR j > p+d.

THE INVERTED FORM OF THE MODEL CAN BE WRITTEN AS

![]()

SINCE THE SERIES Σπj IS CONVERGENT, THE WEIGHTS πj DIE OUT, SO THAT THE CURRENT VALUE OF THE TIME SERIES DEPENDS MAINLY ON VALUES OF πj IN THE RECENT PAST. THIS IS IN CONTRAST TO THE RANDOM-SHOCK MODEL,

![]()

WHERE THE WEIGHTS DO NOT

DIE OUT FOR NONSTATIONARY MODELS (I.E., THE TERM ![]() DOES NOT DECREASE TO ZERO AS k INCREASES).

DOES NOT DECREASE TO ZERO AS k INCREASES).

FORECASTING

ONCE A MODEL PASSES THE VARIOUS TESTS OF MODEL ADEQUACY, IT MAY BE USED AS A BASIS FOR FORECASTING, I.E., PREDICTING THE FUTURE VALUE OF THE PROCESS, GIVEN AN OBSERVED SEQUENCE OF OBSERVATIONS.

THE OBJECTIVE IS TO ESTIMATE THE VALUE OF

![]()

FOR INTEGER ![]() WHERE WE HAVE

OBSERVATIONS

WHERE WE HAVE

OBSERVATIONS

![]()

THE TIME t IS CALLED THE ORIGIN

OF THE FORECAST, AND THE VALUE OF ![]() ISCALLED THE LEAD TIME OF THE FORECAST. THIS

FORECAST WILL BE DENOTED BY

ISCALLED THE LEAD TIME OF THE FORECAST. THIS

FORECAST WILL BE DENOTED BY

![]()

THE STANDARD APPROACH TO FORECASTING IS TO DETERMINE THE FORECAST THAT HAS MINIMUM MEAN SQUARED ERROR OF PREDICTION, I.E. THE ONE FOR WHICH

![]()

IS MINIMIZED. IT CAN BE

SHOWN THAT THIS FORECAST IS THE EXPECTED VALUE OF ![]() CONDITIONAL ON THE OBSERVATIONS

CONDITIONAL ON THE OBSERVATIONS ![]() :

:

![]()

TO CALCULATE THE FORECAST, THIS EXPECTED VALUE MUST BE DETERMINED. WHILE THIS PRESENTATION DOES NOT GENERALLY PRESENT MATHEMATICAL PROOFS, THE DERIVATION OF AN EXPRESSION FOR THIS EXPECTED VALUE IS STRAIGHTFORWARD IN THE CASE IN WHICH THE MODEL IS STATIONARY, AND WILL BE PRESENTED.

IN THE STATIONARY CASE, WE MAY WRITE

![]()

THE OBJECTIVE IS TO

CONSTRUCT A FORECAST ![]() OF

OF ![]() WHICH IS A LINEAR COMBINATION OF CURRENT AND PREVIOUS

OBSERVATIONS, zt, zt-1, zt-2,…, OR,

EQUIVALENTLY, OF CURRENT AND PREVIOUS SHOCKS, at, at-1, at-2….

WHICH IS A LINEAR COMBINATION OF CURRENT AND PREVIOUS

OBSERVATIONS, zt, zt-1, zt-2,…, OR,

EQUIVALENTLY, OF CURRENT AND PREVIOUS SHOCKS, at, at-1, at-2….

LET US DENOTE THE FORECAST AS

![]()

WHERE THE WEIGHTS Ψj* ARE TO BE DETERMINED TO MINIMIZE THE MEAN- SQUARED ERROR OF PREDICTION (OR MEAN SQUARED FORECAST ERROR). THE MEAN-SQUARED FORECAST ERROR IS

![]()

WHICH IS MINIMIZED BY

SETTING ![]() .

.

IT THEN FOLLOWS THAT

![]()

WHERE

![]()

IS THE ERROR OF THE

FORECAST ![]() AT LEAD TIME

AT LEAD TIME ![]() .

.

THE EXPECTED VALUE OF ![]() IS ZERO (SINCE THE EXPECTED VALUE OF EACH OF THE a’s

IS ZERO). THE VARIANCE OF

IS ZERO (SINCE THE EXPECTED VALUE OF EACH OF THE a’s

IS ZERO). THE VARIANCE OF ![]() IS

IS

![]()

ASSUMING THAT THE at ARE INDEPENDENT, WE HAVE (IN THE STATIONARY CASE)

![]()

SO

![]()

THAT IS, THE MINIMUM (LINEAR)

MEAN-SQUARED-ERROR FORECAST AT ORIGIN t FOR LEAD TIME ![]() IS THE CONDITIONAL EXPECTATION OF

IS THE CONDITIONAL EXPECTATION OF ![]() AT TIME t. CONSIDERED AS A FUNCTION OF

AT TIME t. CONSIDERED AS A FUNCTION OF ![]() FOR FIXED T,

FOR FIXED T, ![]() IS CALLED THE FORECAST FUNCTION FOR ORIGIN t.

IS CALLED THE FORECAST FUNCTION FOR ORIGIN t.

NOTE THAT THE PRECEDING PROOF ASSUMED STATIONARITY. THE GENERAL FORM OF THE ARIMA MODEL IS NONSTATIONARY, AND DERIVATION OF THE FORECAST FUNCTION IN THE GENERAL (NONSTATIONARY) CASE IS A LITTLE DIFFERENT.

CALCULATION OF THE FORECAST MAY BE DONE IN THREE DIFFERENT WAYS, CORRESPONDING TO THE THREE DIFFERENT REPRESENTATIONS OF THE MODEL. IN THIS PRESENTATION, WE SHALL DISCUSS FORECASTING USING THE DIFFERENCE-EQUATION REPRESENTATION. SEE CHAPTER 5 (pp. 129 – 176) OF BJRL FOR DETAILED DISCUSSION.

FORECASTING USING THE DIFFERENCE-EQUATION FORM

THE FORMULA FOR CALCULATING FORECASTS FROM THE DIFFERENCE-EQUATION FORM OF AN ARIMA MODEL IS

![]()

WHERE ![]() DENOTES THE OBSERVED VALUE zt-j FOR j

>= 0 AND THE MOVING AVERAGE TERMS ARE NOT PRESENT FOR

DENOTES THE OBSERVED VALUE zt-j FOR j

>= 0 AND THE MOVING AVERAGE TERMS ARE NOT PRESENT FOR ![]() > q. THE VALUE OF THE MOVING AVERAGE TERM (MODEL

ERROR TERM) at IS ESTIMATED AS

> q. THE VALUE OF THE MOVING AVERAGE TERM (MODEL

ERROR TERM) at IS ESTIMATED AS

![]()

FORECASTING USING A DIFFERENCE EQUATION – ADDITIONAL DETAILS

THIS SUBSECTION PRESENTS SOME ADDITIONAL DISCUSSION OF THE PROCEDURE FOR CALCULATING A FORECAST FROM THE DIFFERENCE-EQUATION REPRESENTATION. THE ISSUE THAT IS ADDRESSED IS TO USE THE PRECEDING FORMULA, VALUES OF THE a’s MUST BE AVAILABLE FOR q TIMES PREVIOUS TO THE FORECAST ORIGIN. THE EASIEST WAY TO ESTIMATE THESE a’s IS TO COMPUTE FORECASTS FROM THE BEGINNING OF THE AVAILABLE SERIES, EVEN THOUGH FORECASTS ARE USUALLY DESIRED ONLY FROM THE LAST AVAILABLE OBSERVATION. THIS IS DONE AS FOLLOWS.

THE GENERAL ARIMA MODEL MAY BE EXPRESSED IN THE FORM

![]()

OR

![]()

OR

![]()

THIS FORM PROVIDES THE BASIS FOR AN ITERATIVE METHOD FOR CALCULATING THE FORECAST. RECALL THAT IN AN ACCEPTABLE ARIMA MODEL, THE θ POLYNOMIAL IS INVERTIBLE, I.E., ITS ROOTS ARE OUTSIDE THE UNIT CIRCLE. THIS IMPLIES THAT THE INFLUENCE OF THE MODEL ERROR TERMS (OR “SHOCKS”) ON FUTURE VALUES OF THE PROCESS DIMINISHES IN TIME.

TO IMPLEMENT THIS METHOD, FORECASTING IS DONE FROM TIME ORIGIN p + d:

![]()

FOR THIS FIRST FORECAST, THE ERROR TERMS ap+1, ap,…,ap-q+1 ARE ESTIMATED BY THEIR EXPECTED VALUES, ZEROS. THIS YIELDS

![]()

THE VALUE OF ap+1

IS ESTIMATED AS THE DIFFERENCE BETWEEN THE FORECAST VALUE ![]() AND THE TRUE (OBSERVED) VALUE zp+d+1:

AND THE TRUE (OBSERVED) VALUE zp+d+1:

![]()

THE SAME PROCEDURE IS

APPLIED TO CONSTRUCT ![]() , BUT NOW USING THE ESTIMATED VALUE

, BUT NOW USING THE ESTIMATED VALUE ![]() FOR ap+1. THE ERROR TERM ap+2

IS ESTIMATED AS

FOR ap+1. THE ERROR TERM ap+2

IS ESTIMATED AS

![]()

AFTER MAKING q FORECASTS IN THIS WAY, ESTIMATED VALUES ARE AVAILABLE FOR ALL ats REQUIRED BY THE MODEL EQUATION.

THIS FORECAST PROCESS IS CONTINUED UP TO THE PRESENT TIME, t. FOR MAKING FORECASTS OF zt BEYOND TIME t, THE VALUES OF at (at+1, at+2,…) ARE UNKNOWN, AND THEIR EXPECTED VALUES, ZEROS, ARE USED.

SINCE THE PROCESS IS ASSUMED INVERTIBLE, THE EFFECT OF ASSUMING ZEROS FOR THE INITIAL VALUES OF THE ats DIES OUT AS THE ITERATIVE FORECASTING PROCEDURE CONTINUES.

UPDATING FORECASTS USING Ψ WEIGHTS

ONCE AN INITIAL FORECAST HAS BEEN DETERMINED, THERE IS AN EASY WAY TO CALCULATE FUTURE FORECASTS FROM IT, AS NEW OBSERVATIONS BECOME AVAILABLE. THIS FORMULA IS:

![]()

WHERE

![]()

FORECAST ERROR VARIANCE AND PROBABILITY LIMITS

THE FORECAST ERROR VARIANCES ARE DETERMINED FROM FORMULAS THAT INVOLVE THE Ψ WEIGHTS. THE RECURSIVE FORMULA FOR DETERMINING THOSE WEIGHTS WAS PRESENTED ABOVE (IN THE SECTION DEALING WITH THE THREE ALTERNATIVE FORMS OF AN ARIMA MODEL).

THE VARIANCE OF THE FORECAST ERROR WAS GIVEN ABOVE, IN TERMS OF THE Ψ WEIGHTS, AS

![]()

IF THE MODEL ERROR TERMS ARE APPROXIMATELY NORMALLY DISTRIBUTED, 95% PROBABILITY LIMITS ARE EQUAL TO THE FORECAST ESTIMATE PLUS AND MINUS 1.96 TIMES THE SQUARE ROOT OF THE VARIANCE.

THE PRECEDING IS A METHOD OF MAKING FORECASTS ASSUMING THAT THE TRUE MODEL IS KNOWN. IN PRACTICE, THE MODEL IS NOT KNOWN, AND ESTIMATED VALUES ARE USED FOR THE MODEL PARAMETERS. IT CAN BE SHOWN THAT FORECASTS BASED ON ESTIMATED PARAMETER VALUES ARE UNBIASED.

THE FORMULA PRESENTED ABOVE FOR THE FORECAST ERROR VARIANCE ASSUMED THAT THE PARAMETER VALUES WERE KNOWN, NOT ESTIMATED. FOR MODELS ESTIMATED FROM LARGE SAMPLES, THESE FORMULAS MAY BE USED. FOR MODELS ESTIMATED FROM SMALL SAMPLES, MODIFIED FORMULAS THAT TAKE INTO ACCOUNT THE ERROR ASSOCIATED WITH PREDICTION OF THE MODEL PARAMETERS ARE AVAILABLE AND SHOULD BE USED (E.G., BOOTSTRAPPING).

IMPULSE RESPONSE FUNCTION

ABOVE, EXPRESSIONS WERE PRESENTED FOR THE IMPULSE RESPONSE FUNCTION FOR A SPECIFIED (TRUE) MODEL, IN THE CASE OF A STATIONARY STOCHASTIC PROCESS. WHEN THE TRUE MODEL IS NOT KNOWN AND THE MODEL PARAMETERS MUST BE ESTIMATED FROM DATA, THE IMPULSE RESPONSE FUNCTION IS ESTIMATED BY SUBSTITUTING ESTIMATED PARAMETER VALUES IN THE FORMULAS FOR THE TRUE MODEL. THIS PROCEDURE PRODUCES CONSISTENT ESTIMATES OF THE IMPULSE RESPONSE FUNCTION.

FOR A HOMOGENEOUS NONSTATIONARY PROCESS, AS MENTIONED, IT IS NOT POSSIBLE TO EXPRESS THE IMPULSE RESPONSE FUNCTION AS AN INFINITE SERIES. IN THIS CASE, A FINITE NUMBER OF THE Ψ WEIGHTS MAY BE CALCULATED, AS DESCRIBED IN THE SECTION THAT DESCRIBED REPRESENTING AN ARIMIA PROCESS IN TERMS OF RANDOM SHOCKS.

AS MENTIONED EARLIER, THE IMPULSE RESPONSE FUNCTION INDICATES THE AVERAGE CHANGE IN THE OUTPUT (zt) CORRESPONDING TO A UNIT CHANGE IN THE INPUT (at), FOR at GENERATED ACCORDING TO A CAUSAL MODEL. IF THE MODEL IS ESTIMATED FROM DATA IN WHICH THE at ARE SIMPLY OBSERVED, NOT CONTROLLED (FORCED), THEN THE IRF IS AN ESTIMATE OF THE CHANGE TO BE EXPECTED IN THE OUTPUT CORRESPONDING TO AN OBSERVED UNIT CHANGE IN THE INPUT. IF THE MODEL IS ESTIMATED FROM EXPERIMENTAL-DESIGN DATA IN WHICH RANDOMIZED FORCED CHANGES ARE MADE IN THE INPUT, THEN THE IRF IS AN ESTIMATE OF THE CHANGE TO BE EXPECTED IN THE OUTPUT IF FORCED CHANGES ARE MADE AS UNDER THE EXPERIMENTAL CONDITONS FOR WHICH THE DATA USED TO ESTIMATE THE MODEL WERE COLLECTED.

THE IMPULSE RESPONSE FUNCTION IS OF GREATER INTEREST FOR MODELS THAT CONTAIN COVARIATES (VARIABLES OF THE MODEL OTHER THAN MODEL ERROR TERMS) THAN FOR MODELS THAT DO NOT. THAT IS, IT IS OF GREATER INTEREST TO KNOW THE RESPONSE OF A CHANGE IN AN EXPLANATORY VARIABLE THAN IN A MODEL ERROR (“SHOCK”).

ALTERNATIVE REPRESENTATIONS: UNIQUENESS OF MODEL, JOINT PDF, ACF (NORMAL)

UNDER THE ASSUMPTION OF INVERTIBILITY, IF A PARTICULAR AUTOCORRELATION FUNCTION CORRESPONDS TO AN ARMA MODEL, THEN THAT MODEL IS UNIQUE (AMONG ALL ARMA MODELS).

MORE SPECIFICALLY, A STATIONARY INVERTIBLE ARIMA STOCHASTIC PROCESS IS UNIQUELY DEFINED BY THE JOINT PROBABILITY DISTRIBUTION FUNCTION OF A SEQUENCE OF OBSERVED VALUES (zt, zt+1, …, zt+k) OR BY THE ARIMA MODEL SPECIFICATION. IF THE PROCESS OBEYS A NORMAL DISTRIBUTION, THEN IT IS CHARACTERIZED (UNIQUELY DEFINED) BY THE MEAN, VARIANCE, AND AUTOCOVARIANCE FUNCTION.

ALTERNATIVE REPRESENTATIONS: STATE SPACE, KALMAN FILTER

THE PRECEDING MATERIAL DESCRIBES THE BASIC THEORY OF UNIVARIATE TIME SERIES ANALYSIS, USING ARIMA STOCHASTIC PROCESS MODELS. AN ARIMA MODEL IS USED TO REPRESENT THE THEORETICAL (TRUE, POPULATION) STOCHASTIC PROCESS THAT GENERATES THE DATA, AND FORECASTS ARE CONSTRUCTED DIRECTLY FROM THE ESTIMATED ARIMA MODEL.

AN ALTERNATIVE METHODOLOGY FOR CONSTRUCTING TIME SERIES MODELS AND GENERATING FORECASTS IS BASED ON THE USE OF STATE SPACE REPRESENTATIONS OF TIME SERIES. THE STATE SPACE METHODOLOGY IS MORE GENERAL THAN THE METHODOLOGY PRESENTED ABOVE, IN THAT IT ALLOWS FOR MEASUREMENT ERRORS (THE PRECEDING DISCUSSION ASSUMES THAT THE PROCESS VALUE, zt, IS KNOWN EXACTLY, WITHOUT MEASUREMENT ERROR), AND ALLOWS FOR TIME-VARYING PARAMETERS (IN THE PRECEDING DISCUSSION, THE MODEL PARAMETERS ARE FIXED).

THE OPTIMAL FORECASTER FOR A STATE SPACE REPRESENTATION IS CALLED THE KALMAN FILTER.

THE STATE SPACE METHODOLOGY MAY BE APPLIED EITHER TO UNIVARIATE OR MULTIVARIATE TIME SERIES. THE FORMULAS INVOLVED ARE IDENTICAL IN THE UNIVARIATE AND MULTIVARIATE CASES. TO AVOID REDUNDANCY, DISCUSSION OF STATE SPACE FORECASTING AND THE KALMAN FILER IS DEFERRED UNTIL THE DISCUSSION OF MULTIVARIATE TIME SERIES MODELS.

EXTENSIONS: ARCH, GARCH

IN THE PRECEDING DISCUSSION, THE (STATIONARITY) ASSUMPTION WAS MADE THAT THE ERROR VARIANCE OF THE STOCHASTIC PROCESS IS CONSTANT (OVER TIME). IN SOME IMPORTANT APPLICATIONS, THIS ASSUMPTION IS UNTENABLE. FOR EXAMPLE, THE VARIABILITY OF STOCK AND COMMODITY PRICES IS KNOWN TO FLUCTUATE OVER TIME.

A STOCHASTIC PROCESS FOR WHICH THE ERROR VARIANCE IS CONSTANT IS CALLED A HOMOSCEDASTIC (OR HOMOSKEDASTIC) PROCESS. A STOCHASTIC PROCESS FOR WHICH THE ERROR VARIANCE IS NOT CONSTANT IS CALLED A HETEROSCEDASTIC (OR HETEROSKEDASTIC) PROCESS.

HETEROSCEDASTICITY IS A FORM OF NONSTATIONARY BEHAVIOR (AS IS THE HOMOGENEOUS NONSTATONARY BEHAVIOR ASSOCIATED WITH ROOTS OF THE AR POLYNOMIAL BEING LOCATED ON THE UNIT CIRCLE).

STANDARD MODELS HAVE BEEN DEVELOPED TO REPRESENT HETEROSCEDASTIC BEHAVIOR. THESE MODELS ARE CALLED AUTOREGRESSIVE CONDITIONAL HETEROSCEDASTICITY (ARCH) MODELS. IN SUCH MODELS, THE VARIANCE OF THE CURRENT ERROR TERM IS A FUNCTION OF THE SIZES OF THE ERROR TERMS FOR PREVIOUS TIME PERIODS.

FOR AN ARCH MODEL, THE ERROR VARIANCE OBEYS AN AUTOREGRESSIVE (AR) MODEL. IF THE ERROR VARIANCE OBEYS AN AUTOREGRESSIVE MOVING AVERAGE MODEL (ARMA), THE MODELS IS CALLED A GENERALIZED AUTOREGRESSIVE CONDITIONAL HETEROSCEDASTICITY (GARCH) MODEL.

THE ARCH AND GARCH MODELS WILL NOT BE DESCRIBED HERE. DETAILED DESCRIPTIONS OF THESE MODELS ARE PRESENTED IN THE REFERENCES. THE BASIC APPROACH TO DEVELOPMENT OF ARCH AND GARCH MODELS IS THE SAME AS FOR THE HOMOSCEDASTIC MODELS DESCRIBED ABOVE. ALL THAT CHANGES IS THAT THE MODELS CONTAIN SOME ADDITIONAL PARAMETERS THAT DESCRIBE HOW THE VARIANCE CHANGES (E.G., USING AN ARMA MODEL TO REPRESENT THE VARIANCE).

DETAILED EXAMPLE OF THE DEVELOPMENT OF A SINGLE-VARIABLE UNIVARIATE TIME SERIES MODEL

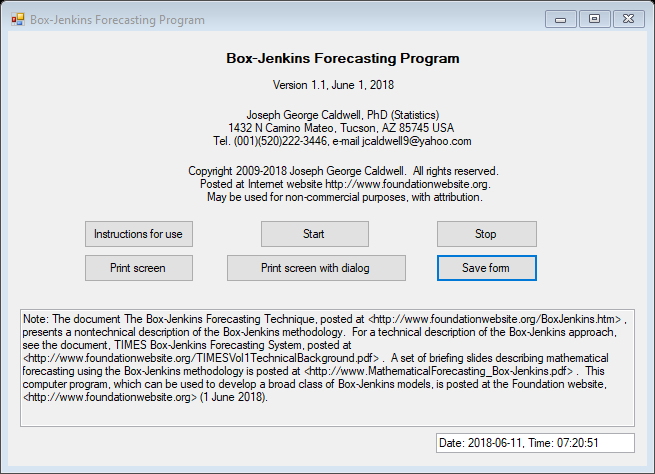

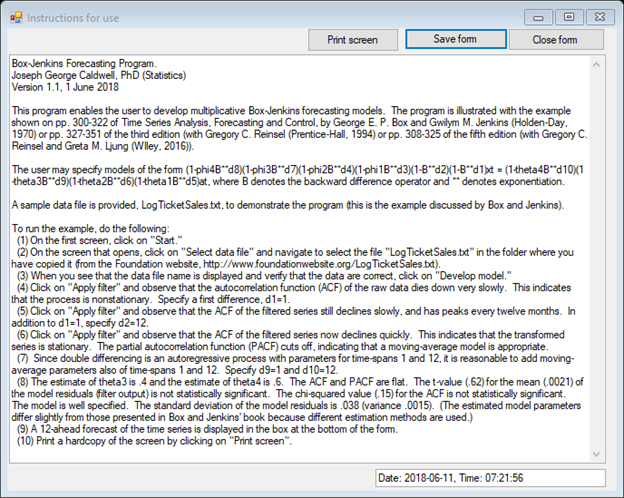

IN THEIR ORIGINAL WORK, BOX AND JENKINS PRESENTED AN EXAMPLE IN WHICH AN ARIMA MODEL WAS DEVELOPED FOR A TIME SERIES OF MONTHLY AIRLINE TICKET SALES. THIS EXAMPLE HAS BEEN PRESENTED IN MANY TEXTS, INCLUDING BJRL (pp. 310-325), CRYER (pp. 240 – 244) AND STATA.

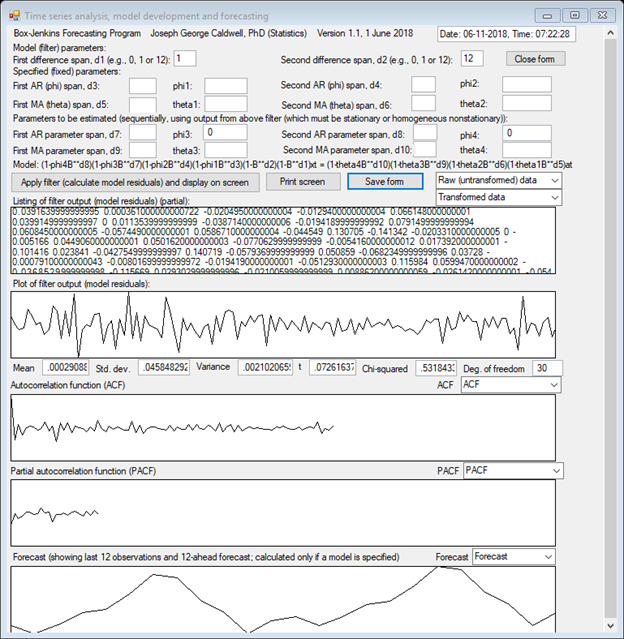

THIS EXAMPLE WILL NOW BE DESCRIBED IN DETAIL. NUMERICAL COMPUTATIONS ASSOCIATED WITH THIS ANALYSIS WILL SHOWN USING THE FREE BOX-JENKINS PROGRAM POSTED AT INTERNET WEBSITE http://www.foundationwebsite.org . THAT PROGRAM ESTIMATES SINGLE-VARIABLE BOX-JENKINS MODELS (SEASONAL OR NONSEASONAL) CONTAINING UP TO TWO PHI PARAMETERS AND TWO THETA PARAMETERS. IT PRODUCES FORECASTS, BUT NOT FORECAST ERROR VARIANCES. IT USES THE “CONDITIONAL” ESTIMATION PROCEDURE, IN WHICH THE VALUES OF MODEL RESIDUALS PRIOR TO THE OBSERVED TIME SERIES ARE REPLACED BY ZEROS. THESE ESTIMATES ARE SLIGHTLY DIFFERENT FROM THOSE PRODUCED USING UNCONDITIONAL ESTIMATION (AS IN STATA).

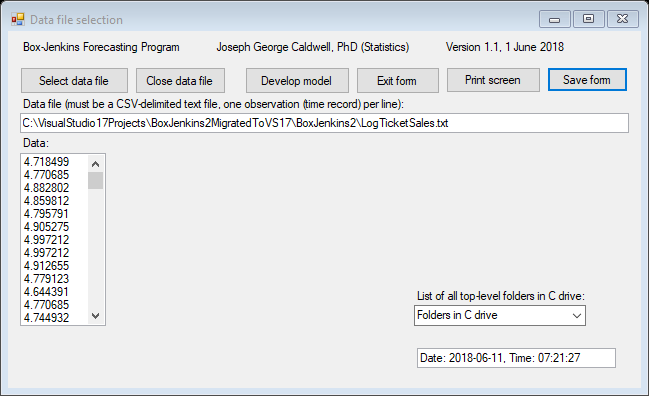

THE DATA ARE LOGARITHMS OF INTERNATIONAL AIRLINE TICKET SALES FROM JANUARY 1949 THROUGH DECEMBER 1960, A TOTAL OF n = 144 OBSERVATIONS.

PROGRAM OUTPUT IS SHOWN, ON THE PAGES THAT FOLLOW, FOR THE FOLLOWING CASES:

1. RAW DATA (ALREADY TRANSFORMED TO LOGARITHMS)

2. DIFFERENCING OF SPAN 1

3. DIFFERENCING OF SPAN 12

4. DIFFERENCING OF SPANS 1 AND 12

5. DIFFERENCING OF SPANS 1 AND 12, AND ESTIMATION OF θ1 AND θ12.

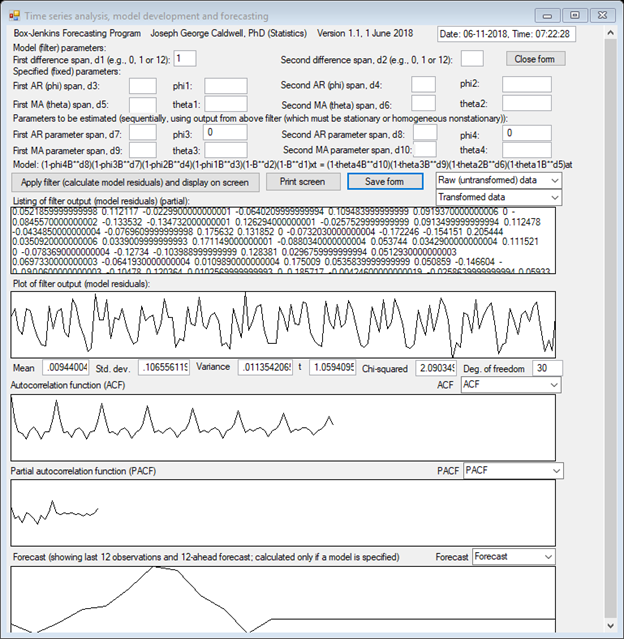

THE PLOT OF THE TIME SERIES CLEARLY SHOWS A NONSTATIONARY TIME SERIES WITH A SEASONAL COMPONENT OF s = 12 MONTHS.

THE AUTOCORRELATION FUNCTION

DECLINES VERY SLOWLY, AND DOES NOT EXHIBIT CYCLICAL BEHAVIOR. THE ACF SUGGESTS

HOMOGENEOUS NONSTATIONARY BEHAVIOR, SO SIMPLE DIFFERENCING IS APPLIED TO OBTAIN

A STATIONARY TIME SERIES. PLOTS ARE SHOWN OF THE ACFs OF TRANSFORMED DATA

APPLYING SINGLE DIFFERENCES OF TIME SPANS 1 AND 12 AND A DOUBLE DIFFERENCE OF

SPANS 1 AND 12. THAT IS, IF THE ORIGINAL (UNTRANSFORMED) DATA ARE DENOTED AS zt,

THEN THE TRANSFORMED SERIES ARE ![]() ,

, ![]() and

and ![]() .

.

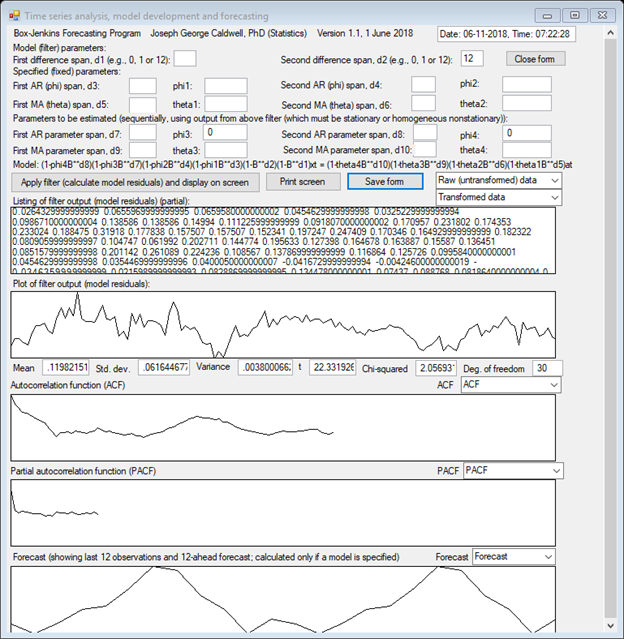

THE SINGLE-DIFFERENCED SERIES ARE NONSTATIONARY, BUT THE DOUBLE-DIFFERENCED SERIES IS STATIONARY.

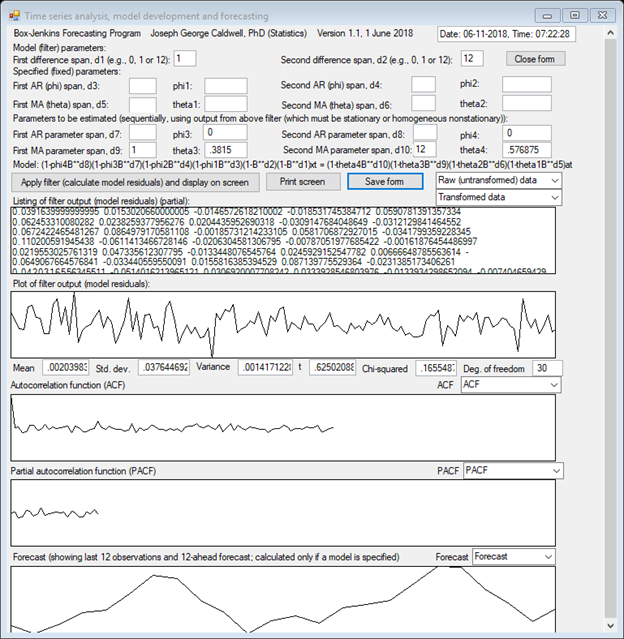

FOR A MODEL INCLUDING A SPAN-1 AND SPAN-12 DIFFERENCING, IT IS ANTICIPATED THAT A REASONABLE MODEL CHOICE MIGHT BE OF ORDER (0,1,1) X (0,1,1)12, THAT IS, A MODEL OF THE FORM

![]()

(SINCE THERE ARE BUT TWO THETA PARAMETERS FOR EACH TIME SPAN, WE SHALL USE THE SIMPLER NOTATION θ = θ12 AND Θ = θ12 IN THE FORMULAS THAT FOLLOW.)

FOR THIS MODEL, THE AUTOCOVARIANCES OF wt ARE:

![]()

![]()

![]()

![]()

![]()

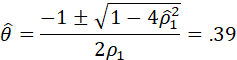

THE EXPRESSIONS FOR γ1 AND γ12 IMPLY

![]()

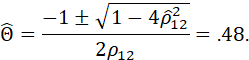

AND

![]()

FROM THE SAMPLE DATA,

ESTIMATED VALUES OF ρ1 AND ρ12

ARE OBTAINED AS ![]() AND

AND ![]() .

.

SUBSTITUTING THESE VALUES IN THE PRECEDING AND SOLVING FOR θ AND Θ YIELDS THE METHOD-OF-MOMENTS ESTIMATES FOR θ AND Θ.

THE VALUES OF θ AND Θ ARE OBTAINED FROM THE QUADRATIC FORMULA:

AND

(NOTE THAT THE SOLUTION IS NOT UNIQUE; THE SOLUTION IS TAKEN THAT CORRESPONDS TO ROOTS OF (1 – θB) AND (1 – ΘB) BEING OUTSIDE THE UNIT CIRCLE, I.E., VALUES OF θ AND Θ LESS THAN ONE IN MAGNITUDE.)

THE PRECEDING VALUES –

METHOD-OF-MOMENT ESTIMATES – ARE CONSIDERED “ROUGH” INITIAL VALUES. IMPROVED

VALUES MAY BE OBTAINED BY THE METHOD OF LEAST SQUARES OR, EQUIVALENTLY, BY

MAXIMIZING THE LIKELIHOOD ASSUMING A NORMAL DISTRIBUTION FOR THE MODEL ERROR

TERMS. APPLYING THAT METHOD YIELDS THE VALUES ![]() AND

AND ![]() (THESE VALUES VARY SLIGHTLY FROM THOSE SHOWN IN BJRL,

AND IN STATA BECAUSE SLIGHTLY DIFFERENT NUMERICAL ESTIMATION PROCEDURES ARE

USED.)

(THESE VALUES VARY SLIGHTLY FROM THOSE SHOWN IN BJRL,

AND IN STATA BECAUSE SLIGHTLY DIFFERENT NUMERICAL ESTIMATION PROCEDURES ARE

USED.)

THE ESTIMATED VARIANCE OF THE MODEL RESIDUALS IS

![]()

AND THE ESTIMATED STANDARD DEVIATION OF THE MODEL RESIDUALS IS

![]()

THE MEAN OF THE RESIDUALS IS .00204. THE t VALUE FOR THIS (FOR DEGREES OF FREEDOM = 128) IS .625. THE STANDARD CHI-SQUARE STATISTIC FOR THE FIRST 30 ESTIMATED AUTOCORRELATIONS OF THE RESIDUALS IS .165 (DEGREES OF FREEDOM = 30). THESE STATISTICS SHOW NO EVIDENCE THAT THE MODEL IS INADEQUATE.

THE PROGRAM OUTPUT INCLUDES A PLOT OF THE 12-AHEAD FORECAST FROM THE LAST OBSERVATION.

3. SUMMARY OF MULTIVARIABLE UNIVARIATE MODELS (TRANSFER FUNCTION MODELS, DISTRIBUTED LAG MODELS)

THE PRECEDING DISCUSSION ADDRESSES MODELS IN WHICH THE STOCHASTIC BEHAVIOR OF AN OBSERVED VARIABLE COULD BE DESCRIBED BY THE PROBABILITY DISTRIBUTION OF A SINGLE RANDOM VARIABLE, I.E., THE MODEL ERROR TERM. SUCH MODELS, INVOLVING A SINGLE RANDOM VARIABLE, ARE CALLED UNIVARIATE MODELS. THE MODEL ERROR TERM IS THE SINGLE INPUT TO THE SYSTEM, AND THE OBSERVED RANDOM VARIABLE IS THE SINGLE OUTPUT, DEPENDENT ON THE INPUT. WE NOW TURN TO CONSIDERATION OF MODELS THAT INVOLVE MORE THAN A SINGLE INPUT, BUT STILL JUST A SINGLE OUTPUT.

FOR THIS SITUATION, THE MODEL INPUTS MAY BE DETERMINISTIC VARIABLES OR RANDOM VARIABLES. THE MODEL INPUTS INCLUDE EXPLANATORY VARIABLES AND A MODEL ERROR TERM. THE MODEL OUTPUT IS AN EXPLAINED VARIABLE, DEPENDENT ON THE EXPLANATORY VARIABLES AND THE MODEL ERROR TERM.

THE KEY POINT HERE IS THAT THE ESSENTIAL STOCHASTIC PROPERTIES OF THE TIME SERIES CAN BE DESCRIBED BY A UNIVARIATE DISTRIBUTION OF THE MODEL ERROR TERM. ALTHOUGH A NUMBER OF RANDOM VARIABLES MAY BE PRESENT IN THE MODEL (E.G., THE EXPLANATORY VARIABLES OF A UNIVARIATE MULTIPLE REGRESSION MODEL), IT IS NOT NECESSARY TO USE A JOINT PROBABILITY DISTRIBUTION (OF MORE THAN ONE COMPONENT) TO DESCRIBE THE STOCHASTIC PROPERTIES OF THE SINGLE RESPONSE VARIABLE OF INTEREST.

FOR THIS SECTION, WE SHALL ASSUME THAT THE MODEL EXPLANATORY VARIABLES ARE EXOGENOUS. THERE ARE A NUMBER OF DEFINITIONS OF THE TERM EXOGENOUS. THEY INVOLVE STATEMENTS ABOUT CONDITIONAL DISTRIBUTIONS OR MODEL ERROR TERMS. FOR THE PURPOSE OF ESTIMATING MODEL PARAMETERS, A VARIABLE IS EXOGENOUS IF KNOWLEDGE OF THE PROCESS GENERATING IT CONTAINS NO INFORMATION ABOUT THE PARAMETERS OF THE DISTRIBUTION OF THE MODEL OUTPUT VARIABLE, CONDITIONAL ON THE EXOGENOUS VARIABLE.

THIS DEFINITION OF EXOGENEITY (INTRODUCED BY R. F. ENGLE, D. F. HENDRY, AND J. F. RICHARD IN 1983) RELATES TO THE SPECIFIC ISSUE OF ESTIMATING CERTAIN PARAMETERS. IT DIFFERS FROM THE USUAL (ECONOMETRIC, MODEL-ERROR-BASED) DEFINITION, INVOLVING COVARIANCES BETWEEN MODEL ERROR TERMS AND MODEL EXPLANATORY VARIABLES.

WHETHER THE CONDITIONS HOLD CANNOT BE DETERMINED FROM ANALYSIS OF DATA. (DATA ANALYSIS MIGHT FALSIFY EXOGENEITY, BUT IT CANNOT ESTABLISH IT.) THEY ARE DETERMINED FROM A CAUSAL MODEL.

EXOGENEITY IS ASSUMED BASED ON THEORETICAL CONSIDERATIONS. WHETHER A VARIABLE IS EXOGENOUS (RELATIVE TO ESTIMATION OF ONE OR MORE MODEL PARAMETERS) IS DETERMINED FROM (OR SPECIFIED IN) A CAUSAL MODEL SHOWING THE CAUSAL RELATIONSHIPS AMONG ALL MODEL VARIABLES.

IF THE MODEL RESIDUALS FOR AN EXPLANATORY VARIABLE ARE INDEPENDENT OF THOSE OF THE EXPLAINED VARIABLE, THEN THE EXPLANATORY VARIABLE IS EXOGENOUS. IT WOULD HOLD, FOR EXAMPLE, IF THE EXPLANATORY VARIABLE WERE GENERATED INDEPENDENTLY OF THE EXPLAINED VARIABLE (E.G., AS IN A COMPUTER SIMULATION OR AS INPUT TO A CONTROLLED EXPERIMENT). THE AMOUNT OF RAINFALL IN AN AREA WOULD EXOGENOUS IN ANY MODEL DEALING WITH ECONOMIC QUANTITIES.

AN EXPLANATORY VARIABLE THAT IS STOCHASTICALLY INDEPENDENT OF THE OTHER MODEL VARIABLES IS EXOGENOUS, BUT REQUIRING EXPLANATORY VARIABLES TO BE INDEPENDENT IS A STRONGER CONDITION THAN IS NECESSARY. THE CONCEPTS OF EXOGENEITY ARE WEAKER THAN INDEPENDENCE.

FOR ADDITIONAL DISCUSSION OF EXOGENEITY, SEE THE FOLLOWING REFERENCES:

BANERJEE, ANINDYA, JUAN DOLADO, JOHN W. GALBRAITH AND DAVID F. HENDRY, CO-INTEGRATION, ERROR CORRECTION, AND THE ECONOMETRIC ANALYSIS OF NON-STATIONARY DATA, OXFORD UNIVERSITY PRESS, 1993

JUDEA PEARL, CAUSALITY: MODELS, REASONING, AND INFERENCE, 2nd ED (CAMBRIDGE UNIVERSITY PRESS, 2009).

HERE FOLLOWS SOME ADDITIONAL INFORMATION ABOUT EXOGENEITY.

ENGLE ET AL. INTRODUCE THREE DIFFERENT LEVELS OF EXOGENEITY, CORRESPONDING TO DIFFERENT ESTIMATION PROBLEMS: INFERENCE, FORECASTING CONDITIONAL ON FORECASTS OF THE EXOGENOUS VARIABLES, AND POLICY ANALYSIS. THESE LEVELS ARE WEAK EXOGENEITY, STRONG EXOGENEITY AND SUPER EXOGENEITY. THE DEFINITIONS OF THESE EXOGENEITY CONCEPTS ARE PRESENTED ON PAGE 18 OF BANERJEE OP. CIT. THEY CORRESPOND TO CONDITIONS ON THE DISTRIBUTION FUNCTIONS INVOLVED IN THE PROBLEM. IF WEAK EXOGENEITY HOLDS, THEN THE MODEL PARAMETERS ARE ESTIMABLE. IF STRONG EXOGENITY HOLDS, THEN FORECASTS MAY BE ESTIMATED CONDITIONAL ON FORECASTED VALUES OF THE EXOGENOUS VARIABLES, ASSUMING THAT THEIR DISTRIBUTION IS UNCHANGED. IF SUPER EXOGENEITY HOLDS, THEN FORECASTS MAY BE MADE CONDITIONAL ON CHANGES IN THE PARAMETERS OF THE DISTRIBUTION OF THE EXOGENOUS VARIABLES.

ENGLE’S CONDITIONS ARE EXPRSSED IN TERMS OF CONDITIONAL DISTRIBUTIONS. THE CONDITIONS ARE COMPLICATED. IT WOULD APPEAR THAT ESTABLISHING EXOGENEITY BY DIRECTLY ESTABLISHING THE VERITY OF THESE CONDITIONS WOULD BE DIFFICULT. A MORE STRAIGHTFORWARD APPROACH WOULD BE TO CONSTRUCT A CAUSAL MODEL DIAGRAM (A DIRECTED ACYCLIC GRAPH) SHOWING THE CAUSAL RELATIONSHIPS AMONG THE MODEL VARIABLES, AND ESTABLISHING WHETHER JUDEA PEARL’S ESTIMABILITY CONDITIONS HELD FOR ESTIMATES OF INTEREST.

BANERJEE (OP. CIT. P 19) COMPARES THE PRECEDING THREE DEFINITIONS OF EXOGENEITY TO THE STANDARD ONES USED IN ECONOMETRIC ANALYSIS: STRICT EXOGENEITY AND PREDETERMINEDNESS. IF ut IS THE MODEL ERROR TERM, THEN A VARIABLE zt IS STRICTLY EXOGENOUS IF E[ztut+i] = 0 FOR ALL i, AND IS PREDETERMINED IF E[ztut+i] = 0 FOR ALL i>=0. ENGLE SHOWS THAT THESE CONDITIONS ARE NEITHER NECESSARY NOR SUFFICIENT FOR VALID INFERENCE, SINCE THEY DO NOT RELATE TO PARAMETERS OF INTEREST.

AMBIGUITY OF THE TERM “MULTIVARIATE”

IT IS RECOGNIZED THAT A UNIVARIATE MODEL CONTAINING EXPLANATORY VARIABLES MAY BE REFERRED TO AS A “MULTIVARIATE” MODEL. IN THE UNIVARIATE MODEL JUST DESCRIBED, THE OUTPUT VARIABLE AND THE EXPLANATORY VARIABLES MAY ALL BE RANDOM VARIABLES, AND THE MODEL COULD REASONABLY BE REFERRED TO AS A MULTIVARIATE MODEL. IN THE CASE IN WHICH THERE IS A SINGLE OUTPUT VARIABLE AND THE EXPLANATORY VARIABLES ARE EXOGENOUS, HOWEVER, SOME OF THE CONCEPTS ARE SIMPLER THAN FOR THE GENERAL MULTIVARIATE CASE (OF MORE THAN ONE OUTPUT VARIABLE). FOR THIS REASON, AND BECAUSE THE UNIVARIATE MODEL IS AN IMPORTANT SPECIAL CASE, IT IS ADDRESSED IN A SEPARATE SECTION.

(IN THIS PRESENTATION, THE TERM “MULTIVARIATE” WILL BE USED TO REFER TO A SITUATION IN WHICH THE PROBABILITY DISTRIBUTION OF INTEREST IS A NON-DEGENERATE (NON-TRIVIAL) ONE, OF DIMENSION GREATER THAN ONE, IN WHICH THE PROBABILITY MASS OCCURS IN MORE THAN ONE DIMENSION. IF THE PROBABILITY DISTRIBUTION OF INTEREST IS ONE-DIMENSIONAL, THEN THE TERM “UNIVARIATE” WILL BE USED. FOR EXAMPLE, A REGRESSION MODEL IN WHICH THERE IS A SINGLE DEPENDENT (EXPLAINED, RESPONSE, OUTPUT) VARIABLE AND SEVERAL EXPLANATORY VARIABLES (WHICH MAY OR MAY NOT BE RANDOM VARIABLES, BUT WHICH ARE NOT CORRELATED WITH THE MODEL ERROR TERM) IS A UNIVARIATE MODEL. SOME AUTHORS SAY THAT A MULTIVARIATE SITUATION IS ONE IN WHICH THE RANDOM VARIABLES OF THE MODEL ARE INTERRELATED. THIS DEFINITION DOES NOT WORK HERE, SINCE IN A REGRESSION MODEL THE DEPENDENT VARIABLE AND THE EXPLANATORY VARIABLES ARE INTERRELATED, BUT A UNIVARIATE PROBABILITY DISTRIBUTION SUFFICES TO DESCRIBE THE ESSENCE OF THE SITUATION. WE REFER TO A UNIVARIATE MODEL INVOLVING EXPLANATORY VARIABLES AS A “MULTIVARIABLE” MODEL (NOT A “MULTIVARIATE” ONE) OR A “MULTIVARIABLE UNIVARIATE” MODEL.)

THE TERM “MULTIPLE TIME SERIES” MAY REFER EITHER TO THE CASE OF A UNIVARIATE MODEL WITH EXPLANATORY VARIATES, OR TO A GENERAL MULTIVARIATE MODEL (AND USUALLY TO THE LATTER).

USING THE STANDARD METHODS OF ESTIMATION, SUCH AS LEAST SQUARES OR MAXIMUM LIKELIHOOD FOR A NORMAL DISTRIBUTION, THE NUMERICAL VALUES OF ESTIMATES OF INTEREST ARE THE SAME, WHETHER THE MODEL IS UNIVARIATE MODEL WITH EXOGENOUS EXPLANATORY VARIABLES, OR A GENERAL MULTIVARIATE MODEL. WHAT DIFFERS IS THE INTERPRETATION OF VARIOUS QUANTITIES, AND THEIR SAMPLING DISTRIBUTIONS (AND RELATED QUANTITIES, SUCH AS PROPERTIES OF TESTS OF HYPOTHESES AND CONFIDENCE INTERVALS). FOR EXAMPLE, IF THE EXPLANATORY VARIABLE IN A UNIVARIATE MODEL IS A RANDOM VARIABLE, THEN IT MAKES SENSE TO REFER TO THE COVARIANCE OF THAT VARIABLE WITH OTHER VARIABLES, AND A SUM OF CROSS PRODUCTS IS AN ESTIMATOR OF THE COVARIANCE, AND IT HAS A SAMPLING DISTRIBUTION. OTHERWISE, IT IS SIMPLY A SUM OF CROSS PRODUCTS (NOT AN ESTIMATE OF A DISTRIBUTION PARAMETER OR MOMENT). FOR SIMPLICITY OF EXPOSITION, A SUM OF CROSS PRODUCTS MAY BE REFERRED TO AS A COVARIANCE, WHETHER IT INVOLVES RANDOM VARIABLES OR NOT.

UNIVARIATE TIME SERIES MODELS WITH EXOGENOUS VARIABLES ARE VARIOUSLY REFERRED TO AS TRANSFER-FUNCTION MODELS OR DISTRIBUTED-LAG MODELS OR MODELS WITH EXOGENOUS VARIABLES. THE TERM “TRANSFER FUNCTION” IS USUALLY USED IN ENGINEERING APPLICATIONS AND THE TERM “DISTRIBUTED LAG” IN ECONOMIC APPLICATIONS. WE SHALL USE THE TERM “TRANSFER FUNCTION.”

UNIVARIATE BOX-JENKINS TYPE MODELS CONTAINING EXOGENOUS EXPLANATORY VARIABLES ARE SOMETIMES REFERRED TO AS ARIMAX MODELS (ARIMA PLUS “X” FOR EXOGENOUS).

TO SIMPLIFY THIS PRESENTATION, WE SHALL IN THIS SECTION SUMMARIZE SOME FEATURES OF MULTIVARIABLE UNIVARIATE TIME SERIES MODELS, BUT DEFER DETAILED DISCUSSION OF IDENTIFICATION AND ESTIMATION PROCEDURES TO THE GENERAL MULTIVARIATE CASE. TRANSFER FUNCTION MODELS MAY BE REPRESENTED AS SPECIAL CASES OF GENERAL MULTIVARIATE MODELS; THIS WILL BE DISCUSSED IN THE SECTION ON MULTIVARIATE MODELS.

THE RELATIONSHIP OF TRANSFER FUNCTION MODELS TO GENERAL MULTIVARIATE MODELS IS SOMEWHAT ANALOGOUS TO THE SITUATION IN ANALYSIS OF VARIANCE OR REGRESSION ANALYSIS, WHERE THE EXPLANATORY VARIABLES (“EFFECTS”) OF A MODEL MAY BE FIXED OR RANDOM. THE ESTIMATES OF THE EFFECTS ARE THE SAME IN EITHER CASE, BUT THE DISTRIBUTIONAL CHARACTERISTICS (AND TESTS OF HYPOTHESES AND CONFIDENCE INTERVALS) DIFFER. IN ENGINEERING APPLICATIONS, IT IS OFTEN POSSIBLE TO CONTROL THE LEVELS OF EXPLANATORY VARIABLES, AS IN A DESIGNED LABORATORY EXPERIMENT OR TESTING OF AN ELECTRONIC FILTER. IN SUCH APPLICATIONS, THE EXPLANATORY VARIABLE MAY OR MAY NOT BE A RANDOM VARIABLE. IN ECONOMIC APPLICATIONS, MANY VARIABLES MAY BE OBSERVED, BUT FEW ARE CONTROLLED. IN ECONOMIC APPLICATIONS, ATTENTION HENCE FOCUSES ON THE CASE IN WHICH THE EXPLANATORY VARIABLES ARE RANDOM VARIABLES. (IF THEY ARE FIXED, THEN THERE IS NO CORRELATION BETWEEN THEM AND THE MODEL ERROR TERM, AND THE LEAST-SQUARES PROCEDURE PRODUCES UNBIASED ESTIMATES. IF THEY ARE RANDOM, IT IS ESSENTIAL THAT THEY NOT BE CORRELATED WITH THE MODEL ERROR TERMS, OR ELSE THE PARAMETER ESTIMATES MAY BE BIASED.)

A DISCRETE LINEAR TRANSFER FUNCTION MODEL

THE FOLLOWING IS A REPRESENTATION OF A GENERAL CLASS OF TRANSFER-FUNCTION MODELS. SEE BJRL FOR DETAILS.

SUPPOSE THAT THE SYSTEM OUTPUT, Yt, IS RELATED TO THE SYSTEM INPUT, Xt, BY THE EQUATION

![]()

OR

![]()

WHERE THE MODEL ERROR TERM, Nt, IS INDEPENDENT OF THE INPUT, Xt. IT MAY BE FURTHER ASSUMED THAT THE MODEL ERROR TERM (OR NOISE) MAY BE REPRESENTED BY AN ARIMA PROCESS:

![]()

WHERE at IS A WHITE NOISE SEQUENCE.

IN GENERAL, THE INPUT Xt MAY BE ANY SEQUENCE OF NUMBERS, E.G., LEVELS IN A DESIGNED EXPERIMENT, OR A SINE WAVE. FOR THIS PRESENTATION, WE SHALL ASSUME, UNLESS OTHERWISE STATED, THAT THE INPUT Xt IS A STOCHASTIC PROCESS.

THE OPERATOR

![]()

IS CALLED THE TRANSFER FUNCTION OF THE PROCESS. THE WEIGHTS ν0, ν1, … ARE CALLED THE IMPULSE RESPONSE FUNCTION OF THE PROCESS.

NOTE THAT IT IS NOT REASONABLE TO PARAMETERIZE THE PROCESS IN TERMS OF THE ν’s. IN GENERAL, THAT WOULD BE A VERY NON-PARSIMONIOUS REPRESENTATION. FOR MANY PROCESSES THAT ARE REPRESENTED BY A SMALL NUMBER OF PARAMETERS (φs AND θs), THE ν’s ARE FUNCTIONALLY RELATED. ESTIMATES OF A LARGE NUMBER OF FUNCTIONALLY RELATED PARAMETERS (CONSIDERED FUNCTIONALLY INDEPENDENT) WOULD BE INEFFICIENT AND UNSTABLE.

IF THE INPUT Xt IS HELD INDEFINITELY AT THE VALUE OF ONE, Yt EVENTUALLY ATTAINS THE VALUE

![]()

WHICH IS CALLED THE STEADY-STATE GAIN OF THE PROCESS.

FOR STABILITY OF THE PROCESS, IT IS REQUIRED THAT THE ROOTS OF THE POLYNOMIAL δ(B) = 0 LIE OUTSIDE THE UNIT CIRCLE. (THIS IS EQUIVALENT TO REQUIRING THAT THE SERIES v(B) CONVERGE FOR |B|≤1.) WE SHALL ASSUME THAT THIS CONDITION HOLDS.

FEATURES OF TRANSFER FUNCTION MODELS.

IMPULSE RESPONSE AND STEP RESPONSE FUNCTIONS

JUST AS ARIMA PROCESSES WERE CHARACTERIZED BY THEIR AUTOCORRELATION AND PARTIAL AUTOCORRELATION FUNCTIONS, TRANSFER FUNCTIONS ARE CHARACTERIZED BY THEIR RESPONSE TO IMPULSE AND STEP INPUTS.

AN IMPULSE INPUT IS DEFINED AS AN INPUT X0 = 1 and Xt = 0 FOR t ≠ 0. THE RESPONSES TO THIS INPUT ARE GIVEN BY THE IMPULSE RESPONSE FUNCTION.

A STEP INPUT IS DEFINED AS AN INPUT OF Xt = 1 IF t ≥ 0 AND Xt =0 IF t < 0. THE RESPONSE OF THE SYSTEM TO A STEP INPUT IS CALLED THE STEP RESPONSE FUNCTION.

FOR A SPECIFIED (TRUE, THEORETICAL, POPULATION) MODEL, THE IMPULSE RESPONSE FUNCTION AND THE STEP RESPONSE FUNCTIONS MAY BE DERIVED.

[INSERT EXAMPLE OF IMPULSE RESPONSE FUNCTION AND STEP RESPONSE FUNCTION.]

CROSS-COVARIANCE AND CROSS-CORRELATION FUNCTIONS

A STATIONARY UNIVARIATE TIME SERIES IS CHARACTERIZED BY THE MEAN, VARIANCE, AND AUTOCOVARIANCE (OR AUTOCORRELATION) FUNCTIONS. SIMILARLY, STATIONARY MULTIVARIATE TIME SERIES (VECTOR STOCHASTIC PROCESSES) ARE CHARACTERIZED BY THE MEANS, VARIANCES AND COVARIANCES OF THE COMPONENT RANDOM VARIABLES. (THE TERM “COMPONENT” REFERS TO ONE OF THE COMPONENTS OF THE MULTIVARIATE RESPONSE VECTOR.)

FOR EASE OF DISCUSSION, WE SHALL RESTRICT DISCUSSION TO THE BIVARIATE CASE, IN WHICH THERE IS A SINGLE EXOGENOUS VARIATE, Xt, AND A SINGLE RESPONSE (OUTPUT) VARIABLE, Yt. (RECALL THAT WE ARE ASSUMING, UNLESS OTHERWISE STATED, THAT Xt IS A STOCHASTIC PROCESS.) WE SHALL ASSUME THAT THESE PROCESSES ARE STATIONARY. IN THIS CASE, THE (STATIONARY) BIVARIATE TIME SERIES IS CHARACTERIZED BY THE MEANS µx AND µy, VARIANCES σ2x AND σ2y, THE COVARIANCE FUNCTION DEFINED BY

![]()

![]()

AND THE CROSS-COVARIANCE FUNCTIONS DEFINED BY

![]()

![]()

FOR A STATIONARY SERIES, THE CROSS-COVARIANCE FUNCTIONS ARE THE SAME FOR ALL t.

IN GENERAL, ![]() IS NOT EQUAL TO

IS NOT EQUAL TO ![]() .

.

SINCE

![]()

IT SUFFICES TO DEFINE JUST

ONE CROSS-COVARIANCE FUNCTION ![]() FOR k = 0,

FOR k = 0, ![]()

THE QUANTITY

![]()

IS CALLED THE CROSS-CORRELATION COEFFICIENT AT LAG k, AND THE FUNCTION

![]()